How are we solving LLM challenges for businesses?

Markovate leverages advanced algorithms and data-driven insights to deliver unparalleled accuracy and relevance. With a keen focus on data security, model architecture, model evaluation, data quality and MLOps management, we develop highly competitive LLM-driven solutions for our clients.

Preprocess the data

We understand that data is not always ready for use, so we preprocess it with techniques such as imputation, outlier detection and data normalization to remove noise and inconsistencies. Our AI engineers also perform feature engineering, guided by domain knowledge and experimentation, to improve the predictive power of the AI model.
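A minimal sketch of these three preprocessing steps in plain Python, assuming a single numeric column where missing values are stored as `None`. The specific choices here (median imputation, Tukey's IQR fences for outlier detection, min-max scaling) are common defaults picked for illustration, not necessarily the techniques used on any given project.

```python
from statistics import median, quantiles

def impute_median(values):
    """Replace missing entries (None) with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]

def drop_outliers_iqr(values, k=1.5):
    """Keep only points inside Tukey's fences: [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if low <= v <= high]

def min_max_normalize(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant column: avoid division by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical raw column with one missing value and one extreme outlier.
raw = [10, 12, None, 11, 13, 250, 12]
cleaned = drop_outliers_iqr(impute_median(raw))
normalized = min_max_normalize(cleaned)
print(cleaned)  # [10, 12, 12.0, 11, 13, 12]
print(normalized)
```

Imputing with the median before removing outliers is deliberate: unlike the mean, the median is barely affected by the extreme value 250.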

Data security

Our AI engineers use role-based access control (RBAC) and multi-factor authentication (MFA) to secure data. Sensitive data is protected with strong encryption: SSL/TLS for data in transit and AES for data at rest. Robust access control mechanisms restrict sensitive data to authorized users only. We can also build data clusters that store your data locally in your region.
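The RBAC and MFA checks described above can be sketched as follows. This is a minimal illustration assuming a hypothetical in-memory role-to-permission table (`ROLE_PERMISSIONS`) and a simple boolean MFA flag; a production system would delegate both to an identity provider, and encryption (TLS, AES) is handled by separate libraries not shown here.

```python
from dataclasses import dataclass, field

# Hypothetical role -> permission table; real systems load this from an IAM service.
ROLE_PERMISSIONS = {
    "admin": {"read:training_data", "write:training_data", "deploy:model"},
    "data_engineer": {"read:training_data", "write:training_data"},
    "analyst": {"read:reports"},
}

@dataclass
class User:
    name: str
    roles: set = field(default_factory=set)
    mfa_verified: bool = False  # set to True only after a second factor succeeds

def is_authorized(user, permission):
    """Grant access only if the user passed MFA and one of their roles holds the permission."""
    if not user.mfa_verified:
        return False
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)

alice = User("alice", {"data_engineer"}, mfa_verified=True)
bob = User("bob", {"analyst"}, mfa_verified=True)
carol = User("carol", {"admin"})  # admin role, but MFA not completed

print(is_authorized(alice, "read:training_data"))  # True
print(is_authorized(bob, "read:training_data"))    # False: role lacks the permission
print(is_authorized(carol, "deploy:model"))        # False: MFA not verified
```

Permissions attach to roles rather than to individual users, so granting or revoking access means editing one table entry instead of every user record.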

Evaluation of Models

We use cross-validation techniques such as k-fold cross-validation to evaluate AI models. The data is split into k subsets (folds); the model is trained on k-1 folds and tested on the remaining one, rotating until every fold has served as the test set, and performance is assessed with accuracy, precision, recall, F1 score and the ROC curve. We also place great importance on hyperparameter tuning and experiment with different model architectures to optimize performance in line with the specific objectives and requirements of the LLM solution.
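A minimal sketch of k-fold cross-validation with precision, recall and F1, written in plain Python so every step is visible. The "model" is a hypothetical toy threshold classifier on a made-up one-dimensional dataset, standing in for real training; in practice a library such as scikit-learn would provide the splitting and metrics.

```python
from statistics import mean

def k_fold_splits(n_samples, k):
    """Yield (train_indices, test_indices); each sample lands in exactly one test fold."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n_samples) if not start <= i < start + size]
        yield train, test
        start += size

def precision_recall_f1(y_true, y_pred):
    """Binary-classification metrics from true positive, false positive and false negative counts."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def fit_threshold(xs, ys):
    """Toy stand-in for training: a threshold halfway between the two class means."""
    pos = [x for x, y in zip(xs, ys) if y == 1]
    neg = [x for x, y in zip(xs, ys) if y == 0]
    return (mean(pos) + mean(neg)) / 2

# Hypothetical 1-D dataset; classes are interleaved so every fold contains both labels.
xs = [0.1, 0.9, 0.2, 0.8, 0.3, 0.7, 0.15, 0.85, 0.25, 0.95]
ys = [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]

f1_scores = []
for train, test in k_fold_splits(len(xs), k=5):
    threshold = fit_threshold([xs[i] for i in train], [ys[i] for i in train])
    preds = [1 if xs[i] > threshold else 0 for i in test]
    _, _, f1 = precision_recall_f1([ys[i] for i in test], preds)
    f1_scores.append(f1)

mean_f1 = mean(f1_scores)
print(f"F1 per fold: {f1_scores}, mean: {mean_f1:.2f}")
```

Averaging the per-fold scores gives a less optimistic estimate than a single train/test split, because every sample is used for testing exactly once.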

MLOps Management

Our MLOps practice automates key ML lifecycle processes to optimize deployment, training and data processing costs. We use automated data ingestion, CI/CD tools such as Jenkins and GitLab CI, and frameworks such as retrieval-augmented generation (RAG) to run continuous cost-impact analysis and build low-cost solutions for your business. Our team also handles infrastructure orchestration, managing resources and dependencies to ensure consistency and reproducibility across environments.

Production-grade model scalability

Large models require significant computational resources, so we optimize them for better performance without sacrificing output quality. For scalability, we use techniques such as quantization, pruning and distillation to support a growing number of requests. We also balance the need for additional resources against cost, for example through cost-optimized resource allocation or by identifying the most cost-effective scaling strategies.
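To illustrate quantization, here is a minimal sketch of symmetric per-tensor int8 quantization on a handful of hypothetical weights. Real deployments quantize whole weight tensors with framework tooling and often use per-channel scales; this only shows the core idea that each float is stored as a small integer plus one shared scale factor.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127] with one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights: each weight is roughly scale * q."""
    return [v * scale for v in q]

# Hypothetical weights for illustration.
weights = [0.42, -1.27, 0.003, 0.9, -0.55]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q)  # integers in the int8 range: 1 byte each instead of 4 bytes for float32
print(f"scale={scale:.4f}, max reconstruction error={max_error:.5f}")
```

Storing int8 values instead of float32 cuts weight memory by roughly 4x; the cost is a rounding error of at most half the scale per weight.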

SERVICES

Our Large Language Model (LLM) Development Services

Large language models have the potential to revolutionize various industries by automating and optimizing workflows.

LLM Consulting and Strategy Building

Large Language Model (LLM) Development

LLM Fine-tuning

Large Language Model Integration

LLM Support & Maintenance

Before developing a large language model tailored to our clients’ specific needs, we provide guidance on the most efficient LLM development path to keep costs and time to a minimum. We aim to help formulate an implementation plan and strategy for the LLM development process based on the specific business use case and industry requirements.

We specialize in fine-tuning large language models (LLMs) such as GPT, Mistral, Gemini and Llama, improving their proficiency through targeted adjustments and training on diverse datasets. Our AI engineers are skilled at enhancing performance for nuanced language understanding and adapting models to specialized domains.

LLM Development

We can help you develop a deep learning model that generates natural language text by predicting the next word in a sequence of words. We can also train or fine-tune the model on a large dataset so it learns the patterns and structures of language.
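To make the next-word objective concrete, here is a deliberately tiny sketch: a bigram model that counts which word follows which in a made-up corpus and predicts the most frequent follower. This is a simplification for illustration only; real LLMs typically use Transformer neural networks over subword tokens and learn probability distributions rather than raw counts.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical training corpus.
corpus = [
    "the model predicts the next word",
    "the model learns patterns in language",
    "a large model learns from data",
]
counts = train_bigram(corpus)
print(predict_next(counts, "the"))    # model
print(predict_next(counts, "model"))  # learns
```

Training on more text changes the counts and therefore the predictions; an LLM does the analogous thing at vastly larger scale, conditioning on long contexts rather than just the previous word.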

LLM Consulting

Before developing an AI-powered model tailored to our clients’ specific needs, we provide guidance on the most efficient LLM development path to keep costs and time to a minimum. Our aim is to assist in formulating an implementation plan and strategy for the LLM development process.

LLM Integration

Our developers can integrate LLMs with your existing content management systems or products. We check model performance and make sure the model is fully trained to deliver accurate results for the specific task.

LLM Support & Maintenance

Our team offers ongoing support and maintenance services to ensure the NLP-based solution consistently delivers accurate results. We monitor and optimize the model’s performance and enable continuous learning, so outcomes keep improving over time.

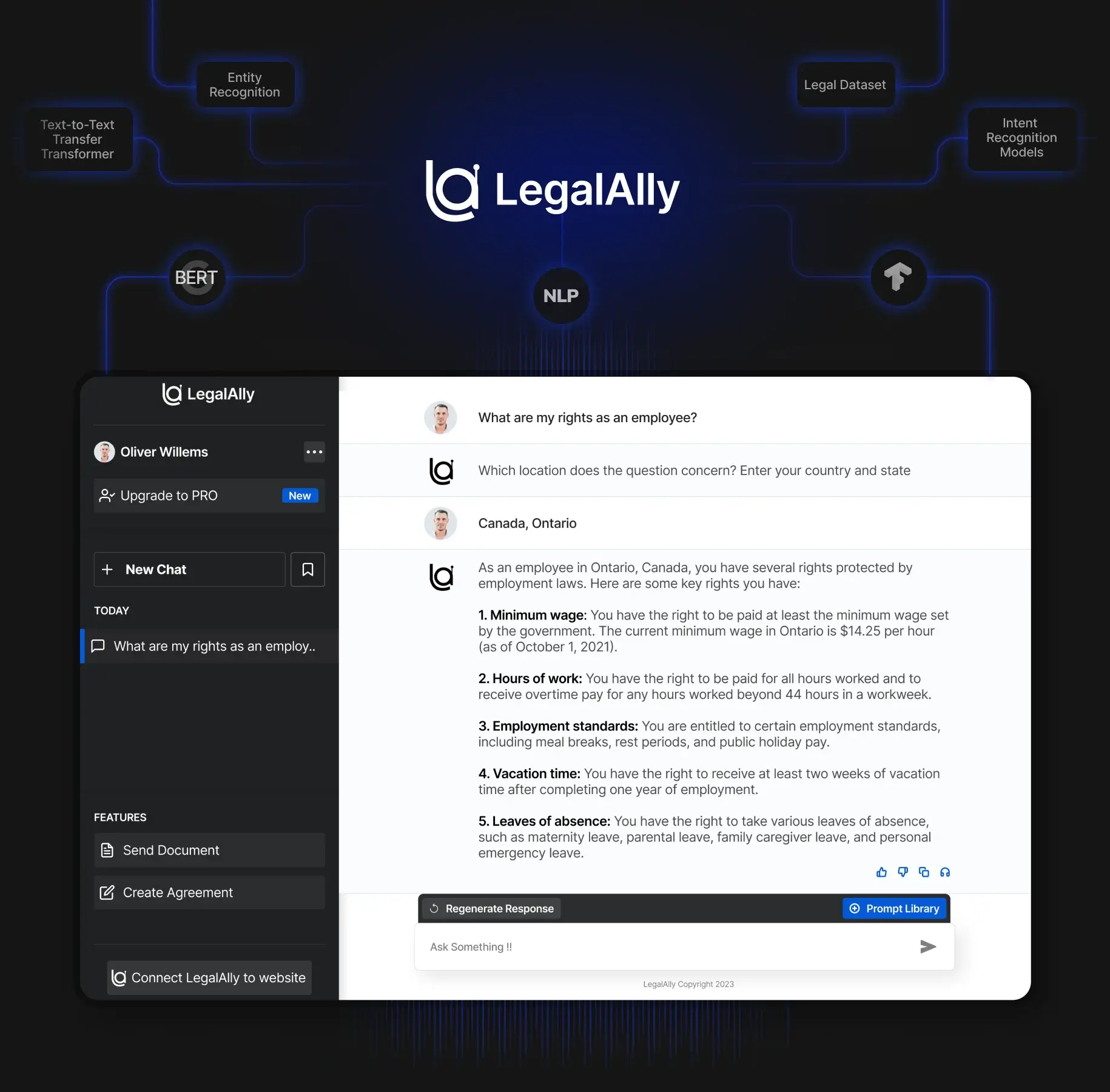

Digital Transformation Accelerator

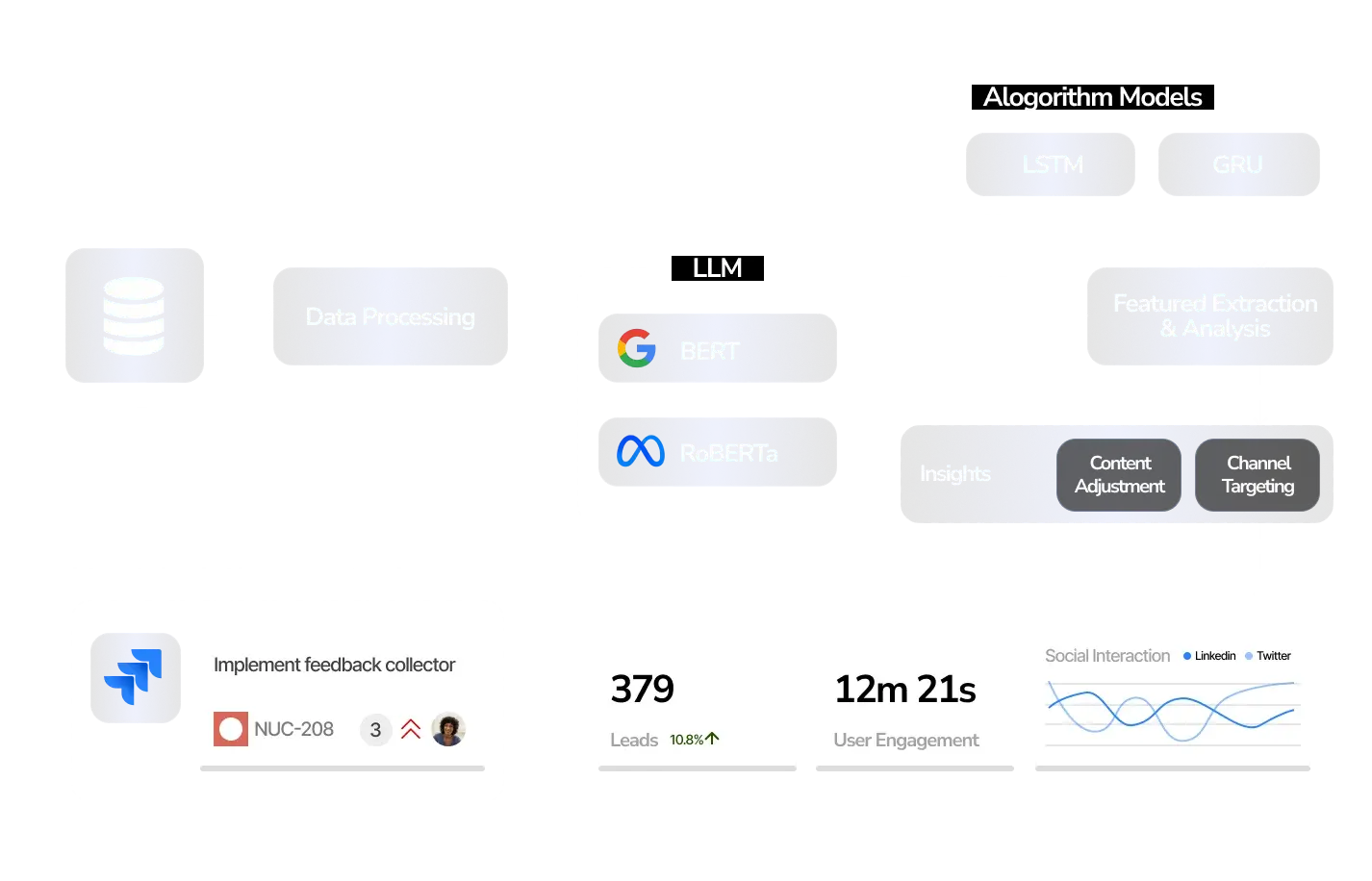

Harnessing advanced language understanding models such as BERT and RoBERTa, we engineered a Digital Transformation Accelerator. Our team of AI/ML experts built predictive insights into every stage of the transformation journey using recurrent neural network architectures such as LSTM and GRU. Finally, we integrated the solution with project management tools like Jira and collaboration platforms such as Slack, fostering cross-functional teamwork and significantly accelerating digital transformation.

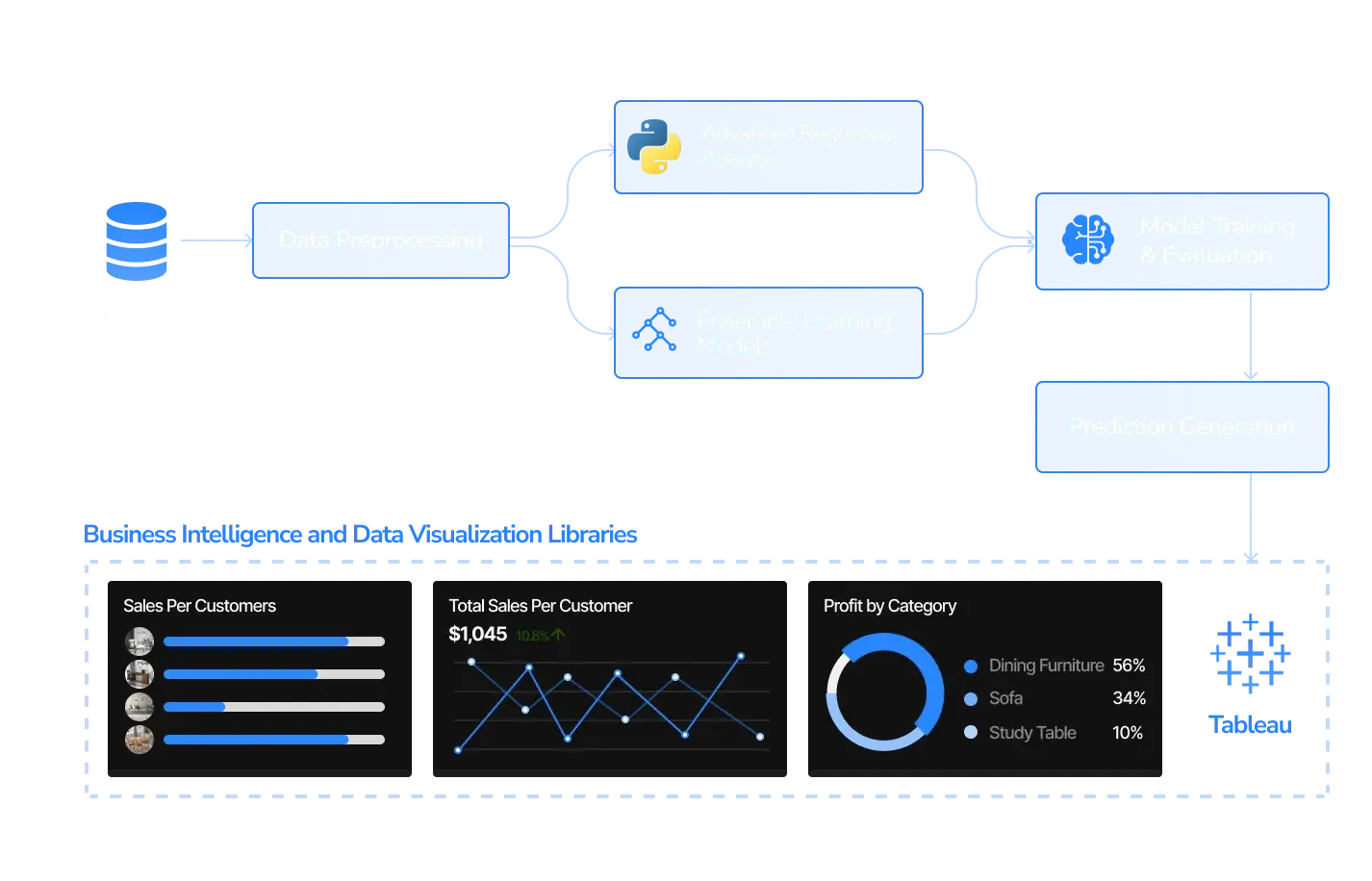

Predictive Analytics Platform for Business Insights

Our predictive analytics platform delivers accurate, in-depth insights using the T5 language model. It employs advanced regression analysis and ensemble learning techniques to generate precise forecasts across diverse business domains. We integrated the solution with business intelligence tools like Tableau and data visualization libraries like Matplotlib, empowering organizations to explore and visualize predictive insights interactively. With our solution, data-driven decisions become intuitive and well-informed, driving business growth and success.

Intelligent Process Optimization Solution

We used the GPT-4 language model to build a solution that analyzes textual descriptions of business processes to extract insights. Our AI/ML engineers also leveraged models such as BERT and T5, which help the solution identify inefficiencies and recommend optimization strategies such as process automation and resource allocation. We ensured a smooth implementation with tools such as TensorFlow and Apache Airflow, maximizing operational efficiency and productivity.

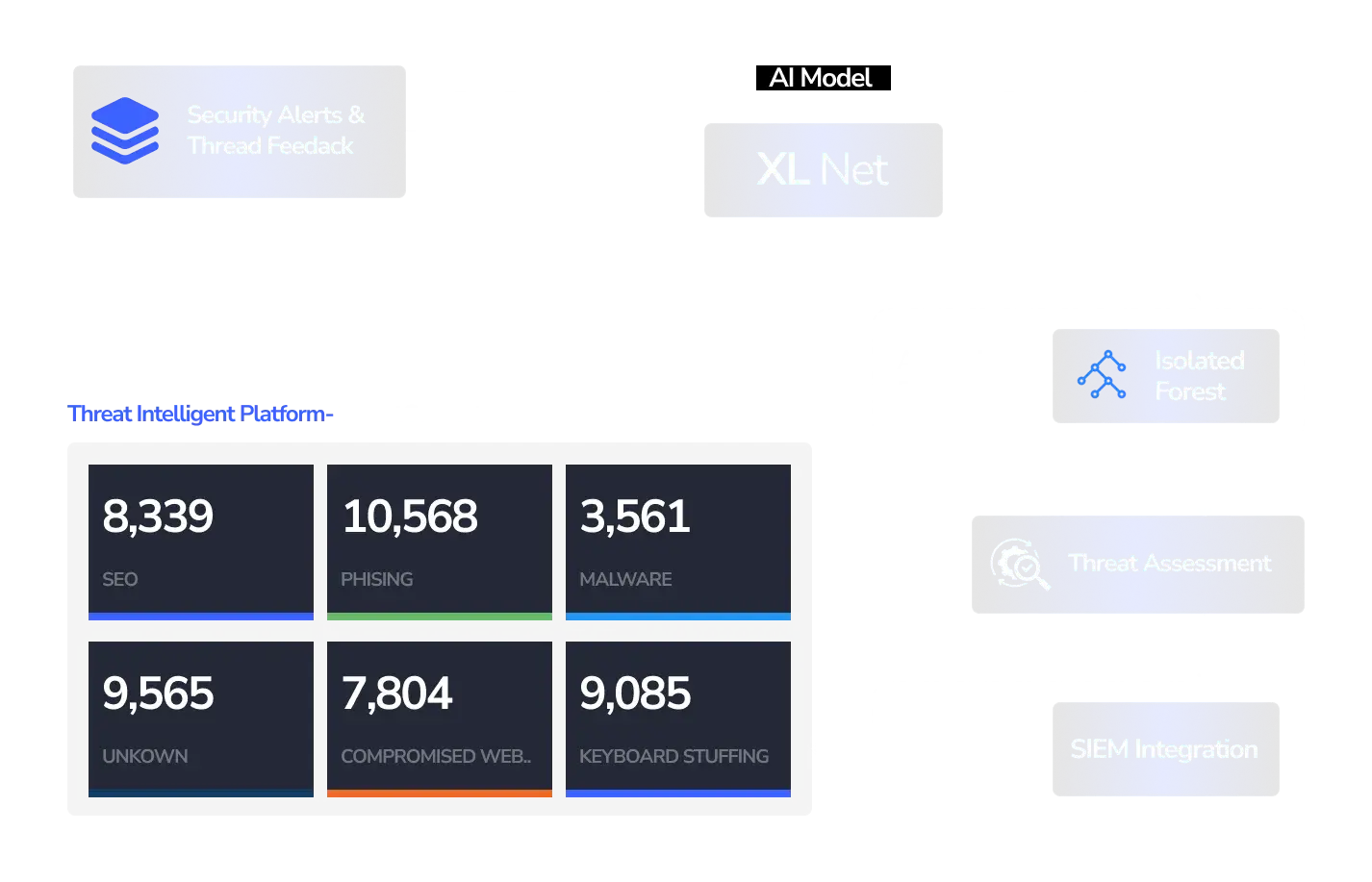

AI-Powered Cybersecurity Defense Platform

We developed a cybersecurity defense platform that operates in real time using the XLNet language model. The solution analyzes security alerts and threat intelligence feeds for proactive threat detection. Our engineers employed anomaly detection techniques such as Isolation Forest and One-Class SVM, enabling the solution to respond swiftly to suspicious activities. We integrated it with leading security information and event management (SIEM) systems like Splunk and threat intelligence platforms such as ThreatConnect, providing robust defense against evolving threats and safeguarding digital assets.

PROCESS

What is our process for building LLM-driven solutions?

Data Preparation

Before we use any data, we help organizations clean, organize, and transform raw data into a format suitable for training. This may include normalizing or standardizing numerical data, encoding categorical data, and generating new features through various transformations to enhance model performance.
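Two of the transformations mentioned above, standardizing numerical data and encoding categorical data, can be sketched in a few lines. This assumes plain Python lists as input and hypothetical example columns; libraries such as scikit-learn offer equivalent, more complete transformers.

```python
from statistics import mean, pstdev

def standardize(values):
    """Z-score standardization: (x - mean) / std, giving mean 0 and unit variance."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:  # constant column carries no information
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

def one_hot(categories):
    """Encode each categorical value as a 0/1 vector over the sorted vocabulary."""
    vocab = sorted(set(categories))
    index = {c: i for i, c in enumerate(vocab)}
    vectors = []
    for c in categories:
        vec = [0] * len(vocab)
        vec[index[c]] = 1
        vectors.append(vec)
    return vocab, vectors

# Hypothetical columns from a customer table.
ages = [25, 35, 45]
regions = ["EU", "US", "EU"]

z_ages = standardize(ages)
vocab, vectors = one_hot(regions)
print(z_ages)   # roughly [-1.22, 0.0, 1.22]
print(vocab)    # ['EU', 'US']
print(vectors)  # [[1, 0], [0, 1], [1, 0]]
```

One-hot encoding avoids implying an order between categories, which a plain integer label (EU = 0, US = 1) would suggest to many models.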

Data Pipeline

After gathering diverse and relevant datasets for training, we ensure data quality and relevance. Our team preprocesses and transforms the data using techniques such as normalization, feature engineering and imputation, which keeps data maintenance costs down. We then enrich the dataset and version it to track changes and ensure reproducibility.
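Data versioning can be as simple as deriving an identifier from the dataset's contents, so that any change produces a new version. A minimal sketch, assuming records are JSON-serializable dicts; the function name `dataset_version` is hypothetical, and dedicated tools such as DVC handle versioning at scale.

```python
import hashlib
import json

def dataset_version(records):
    """Derive a deterministic version ID from the dataset's contents.

    Each record is serialized with sorted keys, so key order does not change the hash,
    while any change to a value produces a different ID.
    """
    digest = hashlib.sha256()
    for record in records:
        digest.update(json.dumps(record, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")  # separator between records
    return digest.hexdigest()[:12]

v1 = dataset_version([{"text": "hello", "label": 1}])
v1_reordered = dataset_version([{"label": 1, "text": "hello"}])
v2 = dataset_version([{"text": "hello", "label": 0}])
print(v1 == v1_reordered)  # True: same content, same version
print(v1 == v2)            # False: a changed label yields a new version
```

Recording this ID alongside each trained model makes it possible to reproduce exactly which data a given model saw.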

Experimentation

Based on the project requirements and objectives, we choose an appropriate model architecture, such as Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs) or Transformer models. We then train the selected model on the preprocessed, high-quality data and evaluate it on performance metrics such as accuracy and relevance.

Data Evaluation

We rigorously evaluate the quality and relevance of the processed data to confirm its suitability for training, using data evaluation tools such as Guardrails, MLflow and LangSmith for thorough assessment and validation. We also implement RAG techniques, which ground generated outputs in retrieved source data, to help detect and mitigate hallucinations. This keeps the model’s outputs grounded and faithful to the source data, minimizing the risk of inaccurate or misleading results.
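A minimal sketch of the retrieval step behind RAG: rank source passages by similarity to the question, then place the best ones in the prompt with an instruction to answer only from them. To stay self-contained it uses bag-of-words cosine similarity as an assumed stand-in for a real embedding model and vector database; the documents and function names are hypothetical.

```python
import math
import re
from collections import Counter

def bag_of_words(text):
    """Lowercased word counts; a crude stand-in for an embedding vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=2):
    """Rank documents by cosine similarity to the query and return the top k."""
    q = bag_of_words(query)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:k]

def build_grounded_prompt(query, documents, k=2):
    """Put retrieved passages in the prompt and instruct the model to stay within them."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you don't know.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )

# Hypothetical knowledge base.
docs = [
    "Our refund policy allows returns within 30 days of purchase.",
    "Shipping to Canada takes 5 to 7 business days.",
    "Support is available by chat from 9am to 5pm.",
]
question = "What is the refund policy for returns?"
print(retrieve(question, docs, k=1))
print(build_grounded_prompt(question, docs))
```

The instruction to admit when the context lacks an answer is what lets the model decline rather than invent facts; evaluation tools can then check generated answers against the retrieved passages.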

Deployment

Once the trained model and its dependencies are packaged into a deployable format, we deploy it to the production environment using platforms such as TensorFlow Serving, AWS SageMaker or Azure Machine Learning. We then implement a monitoring system to track the model’s performance in production, gather user feedback and use that feedback loop to improve the model over time.
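A minimal sketch of the monitoring and feedback loop, assuming users rate each response as helpful or not. The class name `FeedbackMonitor` and the window, threshold and minimum-sample values are hypothetical choices for illustration; managed platforms offer their own monitoring features.

```python
from collections import deque

class FeedbackMonitor:
    """Track the share of positive user feedback over a sliding window and flag degradation."""

    def __init__(self, window=100, threshold=0.8, min_samples=20):
        self.window = deque(maxlen=window)  # oldest ratings drop out automatically
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, helpful):
        self.window.append(1 if helpful else 0)

    def score(self):
        return sum(self.window) / len(self.window) if self.window else None

    def needs_attention(self):
        if len(self.window) < self.min_samples:
            return False  # not enough evidence yet
        return self.score() < self.threshold

monitor = FeedbackMonitor(window=10, threshold=0.8, min_samples=5)
for _ in range(5):
    monitor.record(True)
healthy = monitor.needs_attention()   # False: all recent feedback positive
for _ in range(5):
    monitor.record(False)
degraded = monitor.needs_attention()  # True: positive share fell to 0.5
print(healthy, degraded, monitor.score())
```

A flag from a check like this would typically trigger investigation, retraining or a prompt update rather than an automatic change in production.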

Prompt Engineering

We define clear and concise prompts or input specifications to generate the desired outputs from the LLM, and experiment with different prompt formats and styles to optimize model performance and output quality. Finally, we integrate the prompts seamlessly into the user interface or application workflow, giving users intuitive controls and feedback mechanisms.
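A minimal sketch of a reusable prompt template and two variants for side-by-side experimentation. The template wording, parameter names and input text are hypothetical; the point is that a template keeps the role, task and output constraints explicit while only the inputs change.

```python
from string import Template

# Hypothetical template: states a role, a specific task and an output constraint.
SUMMARY_PROMPT = Template(
    "You are an assistant for $audience.\n"
    "Summarize the text below in at most $max_sentences sentences.\n"
    "Use plain language and do not add facts that are not in the text.\n\n"
    "Text:\n$text"
)

def render_prompt(text, audience="business analysts", max_sentences=3):
    """Fill every placeholder; Template.substitute raises KeyError if one is missing."""
    return SUMMARY_PROMPT.substitute(
        audience=audience, max_sentences=max_sentences, text=text.strip()
    )

# Two prompt variants to compare during experimentation (hypothetical input text).
report = "Quarterly sales increased while support tickets declined."
variants = {
    "brief": render_prompt(report, max_sentences=2),
    "detailed": render_prompt(report, audience="executives", max_sentences=5),
}
for name, prompt in variants.items():
    print(f"--- {name} ---\n{prompt}\n")
```

Sending each variant to the model on the same inputs and scoring the outputs is a simple way to compare prompt formats before wiring the winner into the application.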

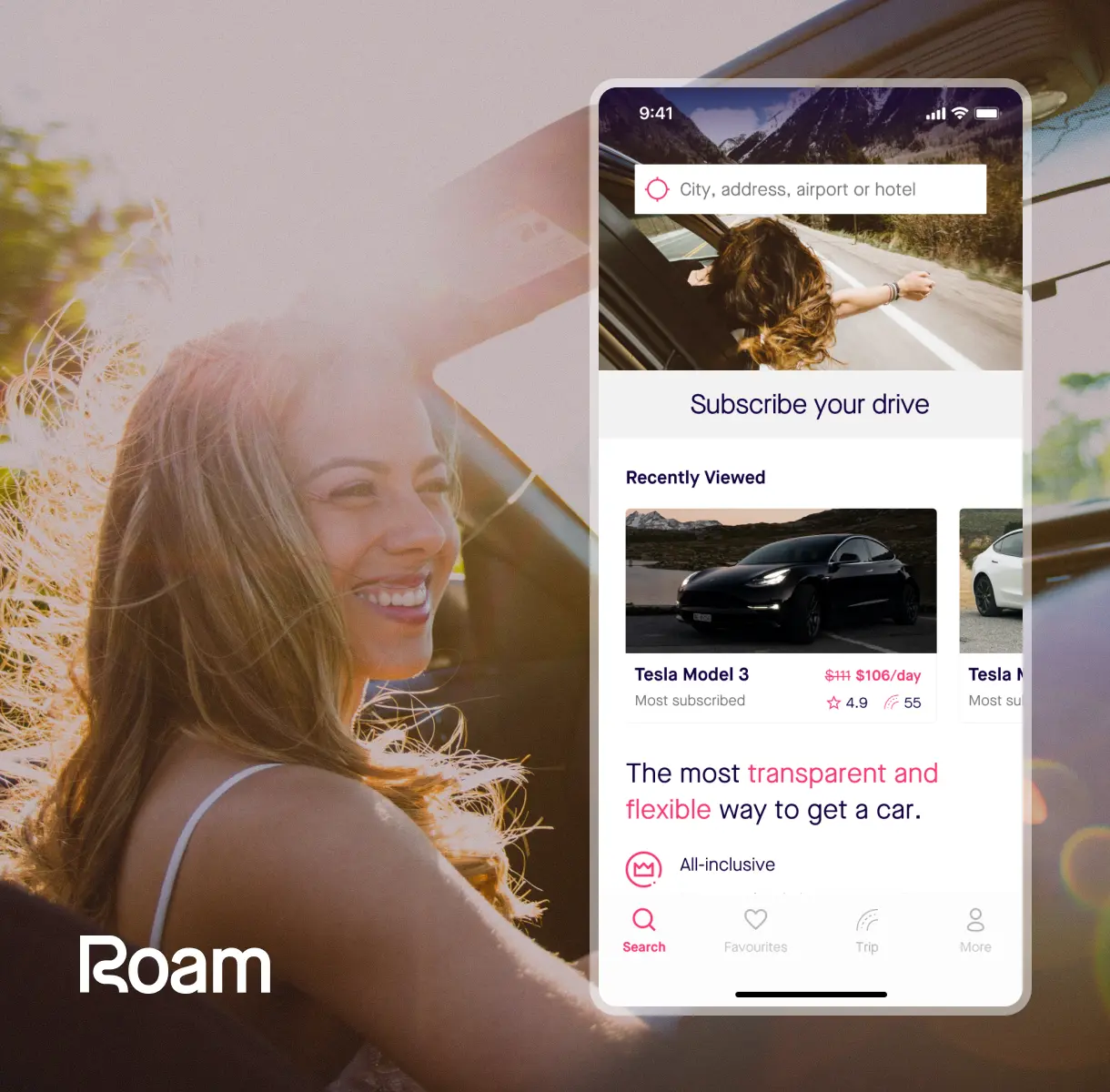

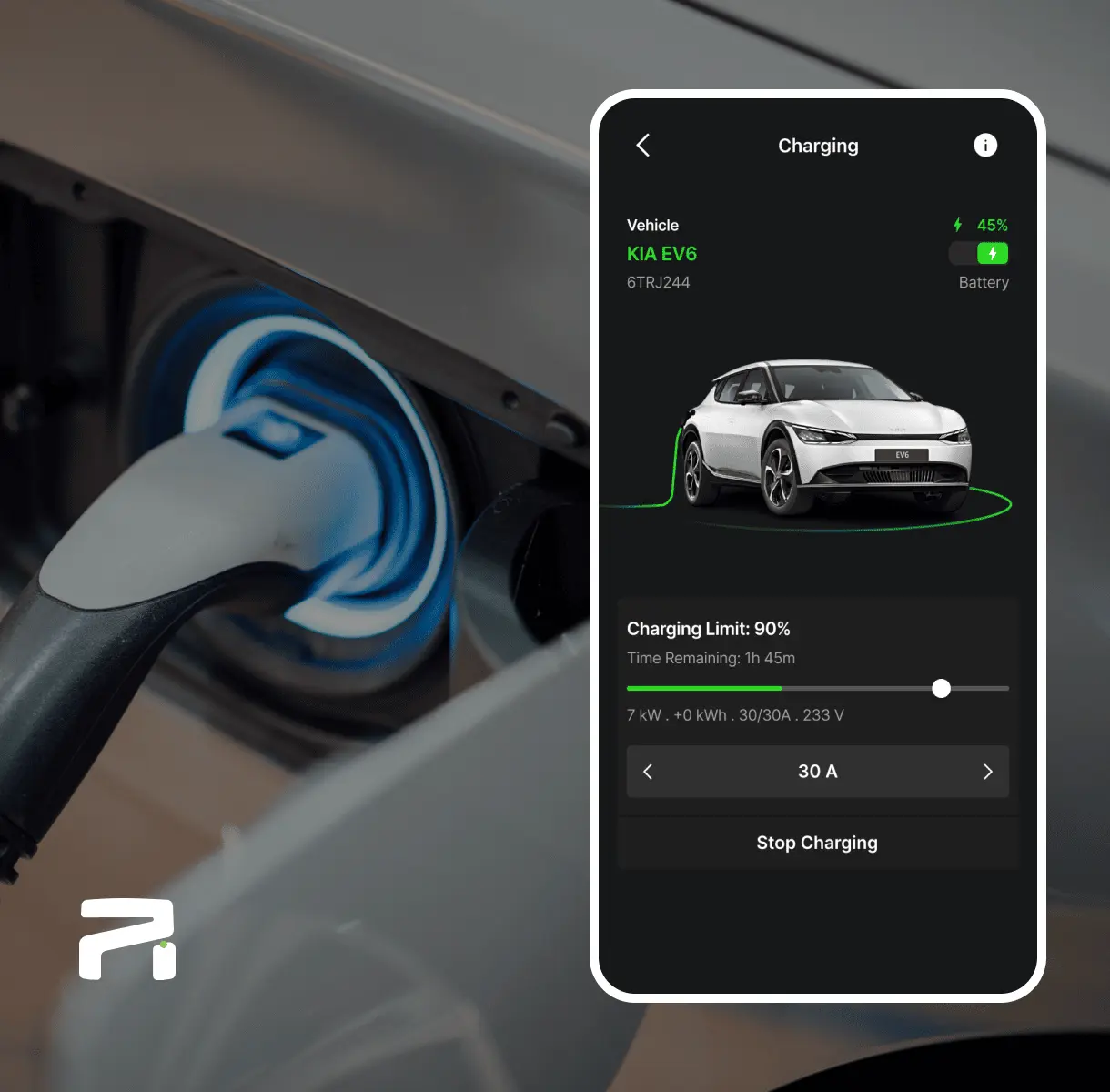

Our proud clients

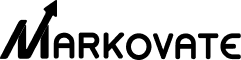

AI-MODELS

Rich Expertise Across Diverse AI Models

GPT-3

A powerful language model capable of generating human-like text.

Davinci

It is a variant of GPT-3 with enhanced performance and larger capacity.

Curie

A mid-sized GPT-3 variant, faster and lower in cost than Davinci while remaining highly capable.

Babbage

This smaller variant of GPT-3 is suitable for apps with limited computational resources.

Ada

The smallest and fastest GPT-3 variant, suited to simple tasks such as text parsing and classification.

GPT-3.5

An improved version of GPT-3, offering enhanced language generation capabilities and performance.

GPT-4

The successor to GPT-3.5, offering more advanced reasoning and language generation abilities and improved performance.

DALL·E

A unique AI model capable of generating original images from textual descriptions, allowing for creative image synthesis.

Whisper

OpenAI’s automatic speech recognition (ASR) model, which transcribes speech accurately and can also translate it into English.

Embeddings

AI models focused on transforming text or other data into numeric representations, enabling more effective processing and analysis.

Moderation

AI models developed to assist in content moderation tasks, helping identify and flag potentially inappropriate or harmful content.

Stable Diffusion

A text-to-image diffusion model that generates images from textual descriptions and can also edit existing images, for example through image-to-image generation and inpainting.

Midjourney

An AI service that generates high-quality images from textual prompts.

Bard

Google’s conversational AI chatbot, since renamed Gemini, which answers questions and generates text.

LLaMA

Meta’s family of large language models with openly available weights, released in several sizes and widely used as a base for fine-tuning.

Claude

Anthropic’s family of large language models, designed for conversation, reasoning, writing and coding tasks.
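The Embeddings entry above describes turning text into numeric vectors. A minimal sketch of why that is useful: once texts are vectors, semantic similarity reduces to cosine similarity. The three-dimensional vectors below are hypothetical hand-picked values, not output from any real model; real embedding models produce hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical embeddings for three short phrases.
embeddings = {
    "invoice overdue": [0.9, 0.1, 0.0],
    "late payment": [0.8, 0.2, 0.1],
    "gym membership": [0.0, 0.3, 0.9],
}

query = embeddings["invoice overdue"]
ranked = sorted(
    (name for name in embeddings if name != "invoice overdue"),
    key=lambda name: cosine_similarity(query, embeddings[name]),
    reverse=True,
)
print(ranked)  # ['late payment', 'gym membership']
```

This nearest-neighbour ranking is the same operation a vector database performs when it powers semantic search or the retrieval step of a RAG pipeline.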

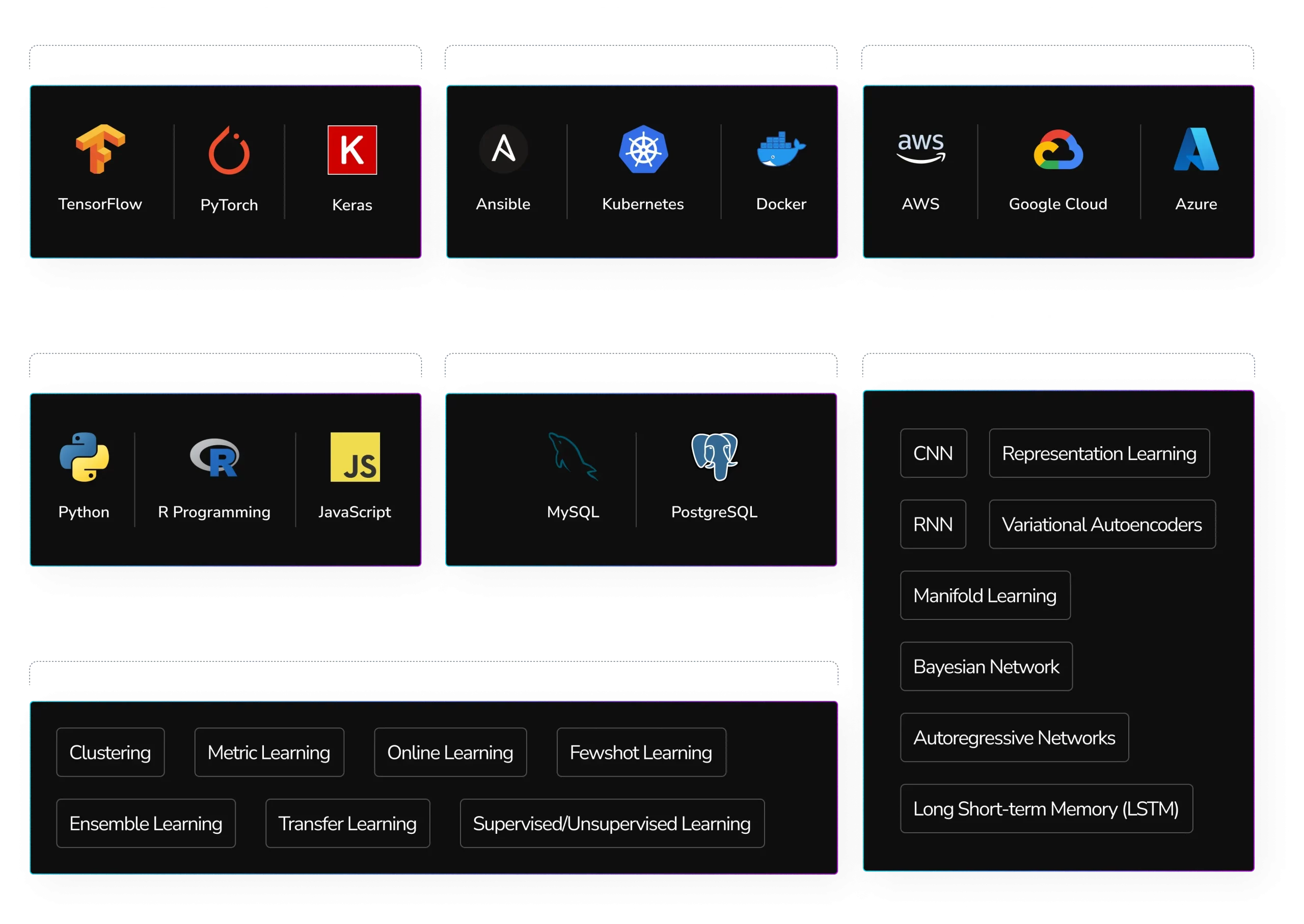

TOOLS & TECHNOLOGY

Our Large Language Model Development Tech Stack

We Excel in LLM-powered Solutions with Expertise in Key Technologies

Machine Learning

Our developers create engaging bots that carry out standard, rules-based procedures through the user interface, mimicking human interaction with digital systems. Accord.NET, Keras, Apache and several other technologies are part of our core stack.

NLP – Natural Language Processing

We develop Natural Language Processing (NLP) applications that assess structured and semi-structured content, including search queries, mined web data, business data repositories and audio sources, to identify emerging patterns, deliver operational insights and perform predictive analytics.

Deep Learning (DL) Development

We apply deep learning to build cognitive business intelligence (BI) frameworks that recognize specific concepts as data is processed. We also mine complex data to reveal new opportunities and continuously refine accuracy with deep-learning algorithms.

Fine Tuning

Fine-tuning a pretrained LLM on a smaller, task-specific dataset tailors it to a particular task, an approach known as transfer learning. This reduces the computation and data needed to build a high-quality model for a specific use case.

Our Collaboration Partners

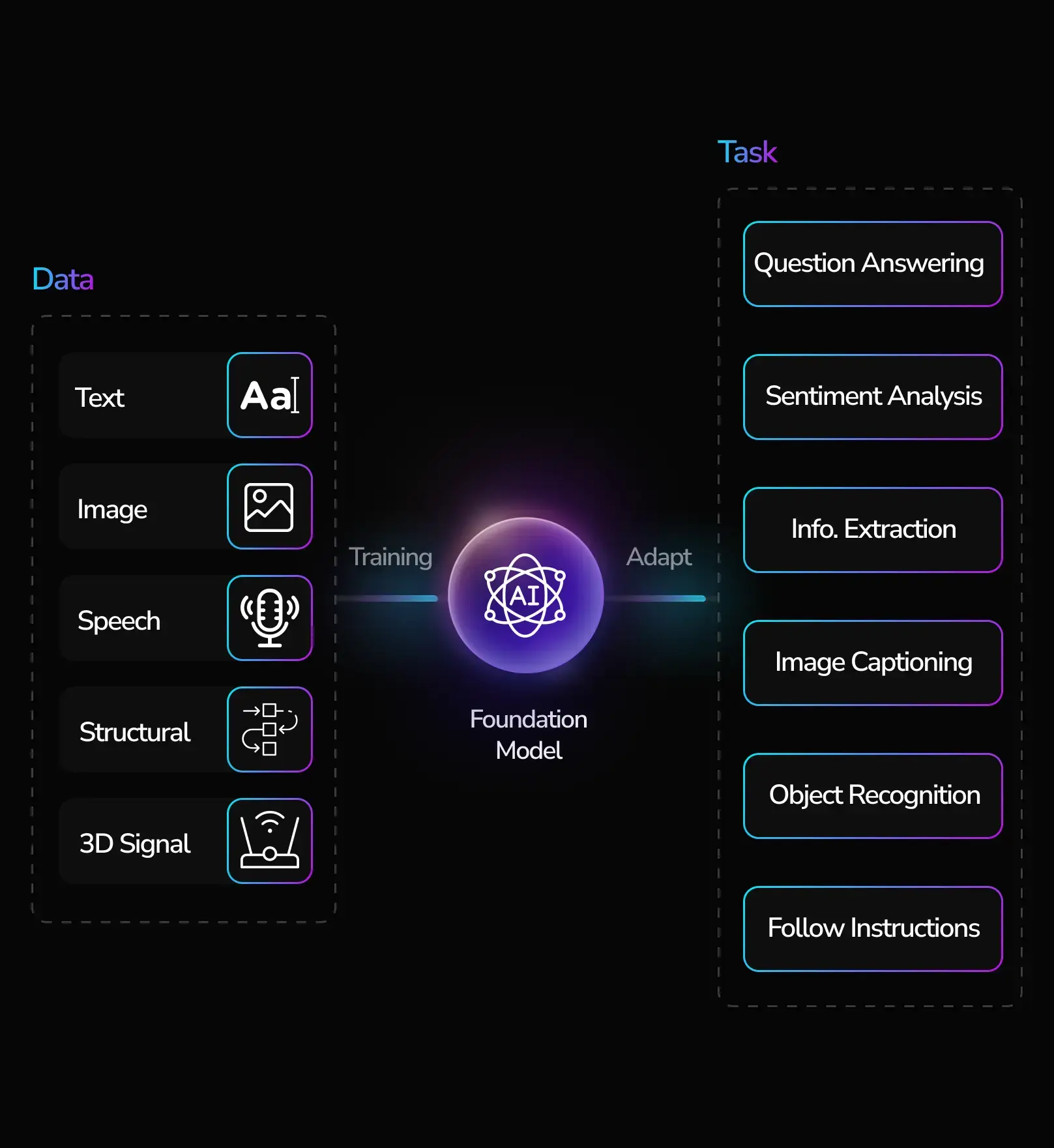

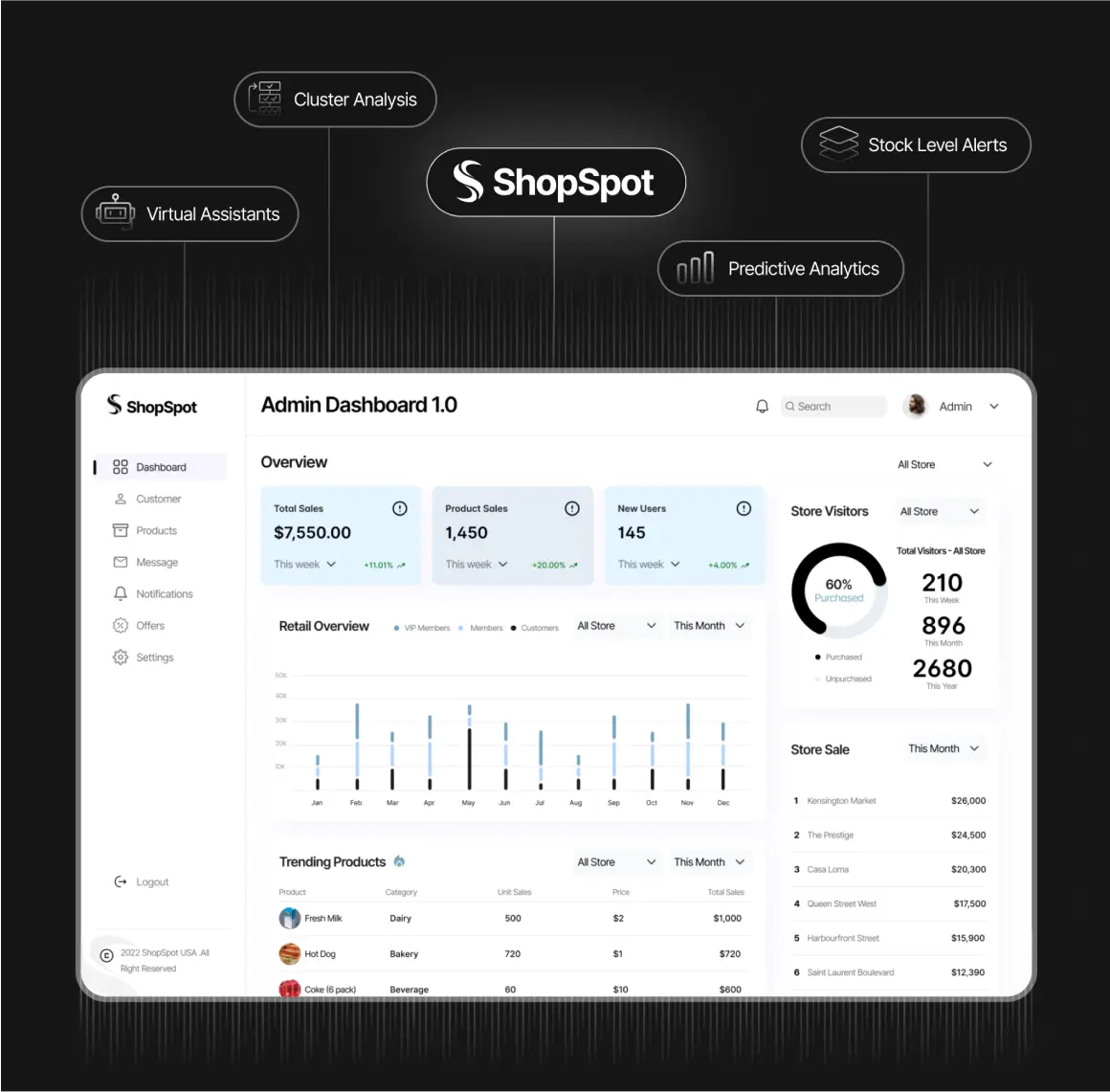

INDUSTRIES

Developing smart solutions for every industry

Healthcare

We harness the power of AI in healthcare to develop solutions that deliver precise diagnostics, tailored treatment plans, and efficient patient management. Our AI-driven technology accelerates drug discovery, offers predictive analytics for patient care, and streamlines administrative tasks, empowering healthcare providers to deliver the best possible care.

“Markovate’s voice ordering system revolutionized our business operations. Seamlessly integrated with our POS system, it leverages NLP and cloud infrastructure to boost efficiency and offer an intuitive ordering experience. I highly recommend Markovate for businesses seeking to elevate their POS capabilities.” – Saskia Riverstone, Founder, SaaSure Solutions

Fintech

We specialize in engineering AI-powered fintech solutions that transform the industry. Our chatbots offer seamless support, while machine learning algorithms ensure security and personalized financial advice. By analyzing spending habits, investment preferences, and risk tolerance, our AI algorithms offer tailored recommendations to help users make informed decisions.

“Their solution transformed our insurance underwriting team. Leveraging MLOps and LLMOps expertise, they automated manual tasks, freeing time for strategic decision-making. Our underwriting capacity soared without sacrificing quality, setting new industry benchmarks.” – Gideon Marlowe, Product Manager, FinOptima Labs

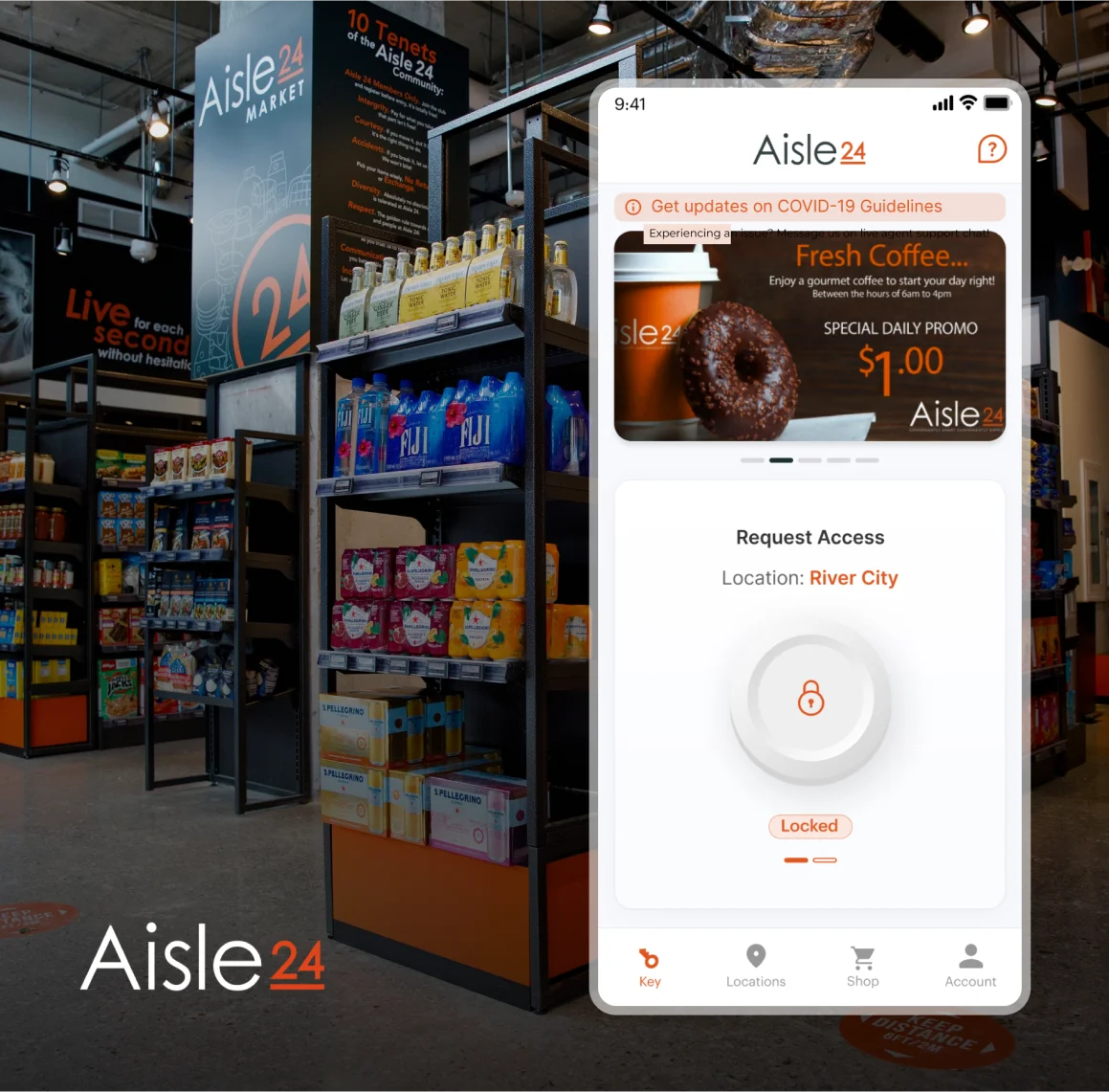

Retail

We develop solutions that redefine retail by harnessing AI for personalized shopping experiences, optimized inventory management, and predictive demand forecasting. Our AI-powered recommendations and virtual try-on experiences enhance customer engagement, transforming both online and offline retail operations.

“Markovate’s use of OpenAI, Pinecone and Llama has transformed our supply chain logistics and sales predictions while streamlining marketing content creation. Their report summarization technology simplifies data analysis and decision-making. They’ve been pivotal in our digital transformation.” – Sara Al Nahyan, CTO, PacProfs Inc.

SaaS

In the SaaS industry, AI revolutionizes user experience by tailoring interfaces through behavior analysis, automating customer service, and fortifying cybersecurity. Leverage scalable, intelligent AI solutions that redefine SaaS.

“Thanks to Markovate and their solution, our inspection accuracy has skyrocketed, significantly reducing our operational costs and improving customer satisfaction.” – Chris Cook, CEO, NVMS

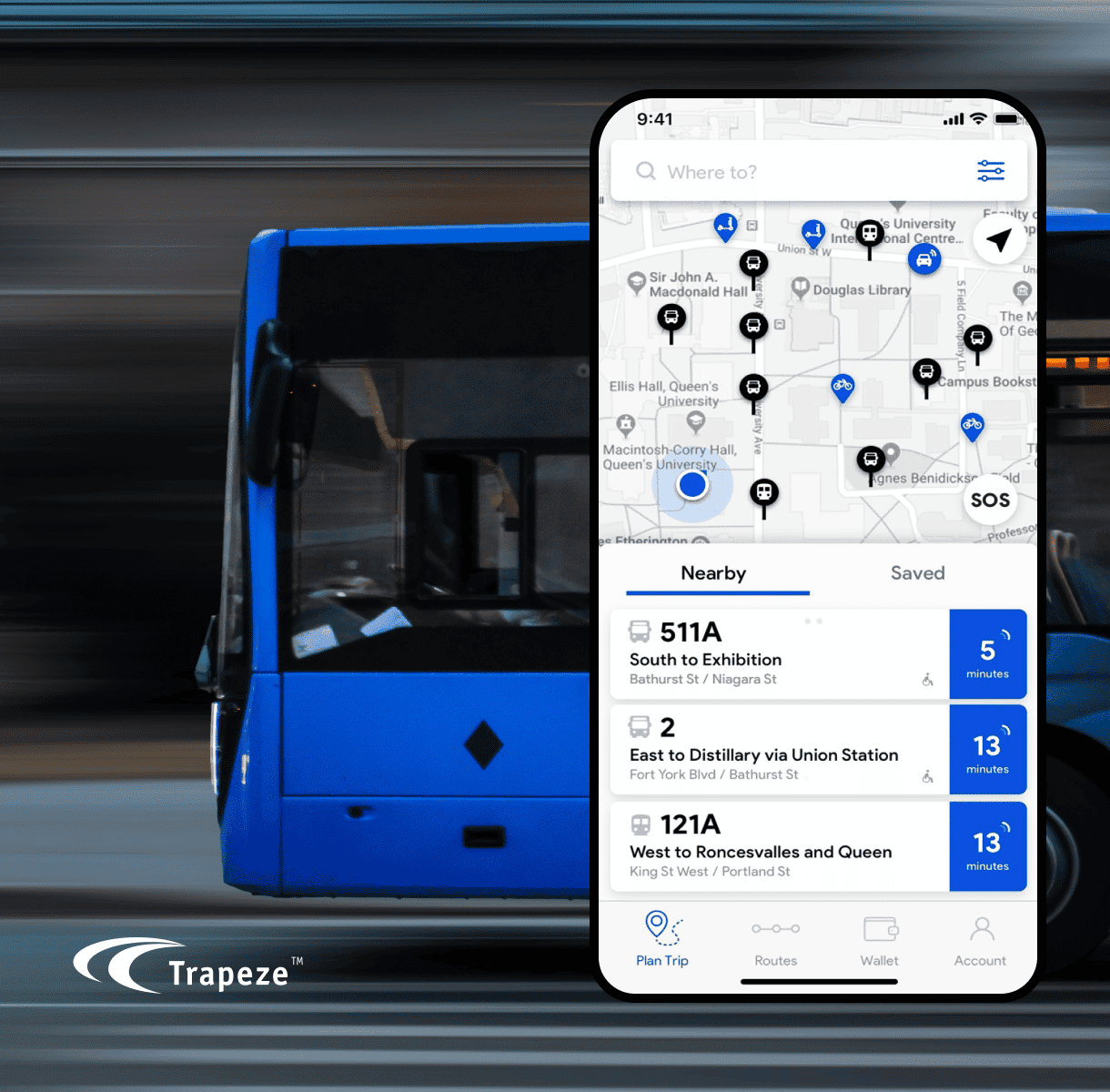

Travel

Elevate travel experiences with our AI-driven personalized recommendations, dynamic pricing strategies, and efficient booking systems. Our interactive chatbots enhance customer service, while predictive maintenance ensures fleet reliability.

“Markovate makes AI development clear and transparent. With active communication and optimal solutions, they alleviated our development concerns. Highly recommended for seamless product development partnerships.” – Garrett Vandendries, Director & PMO, Trapeze

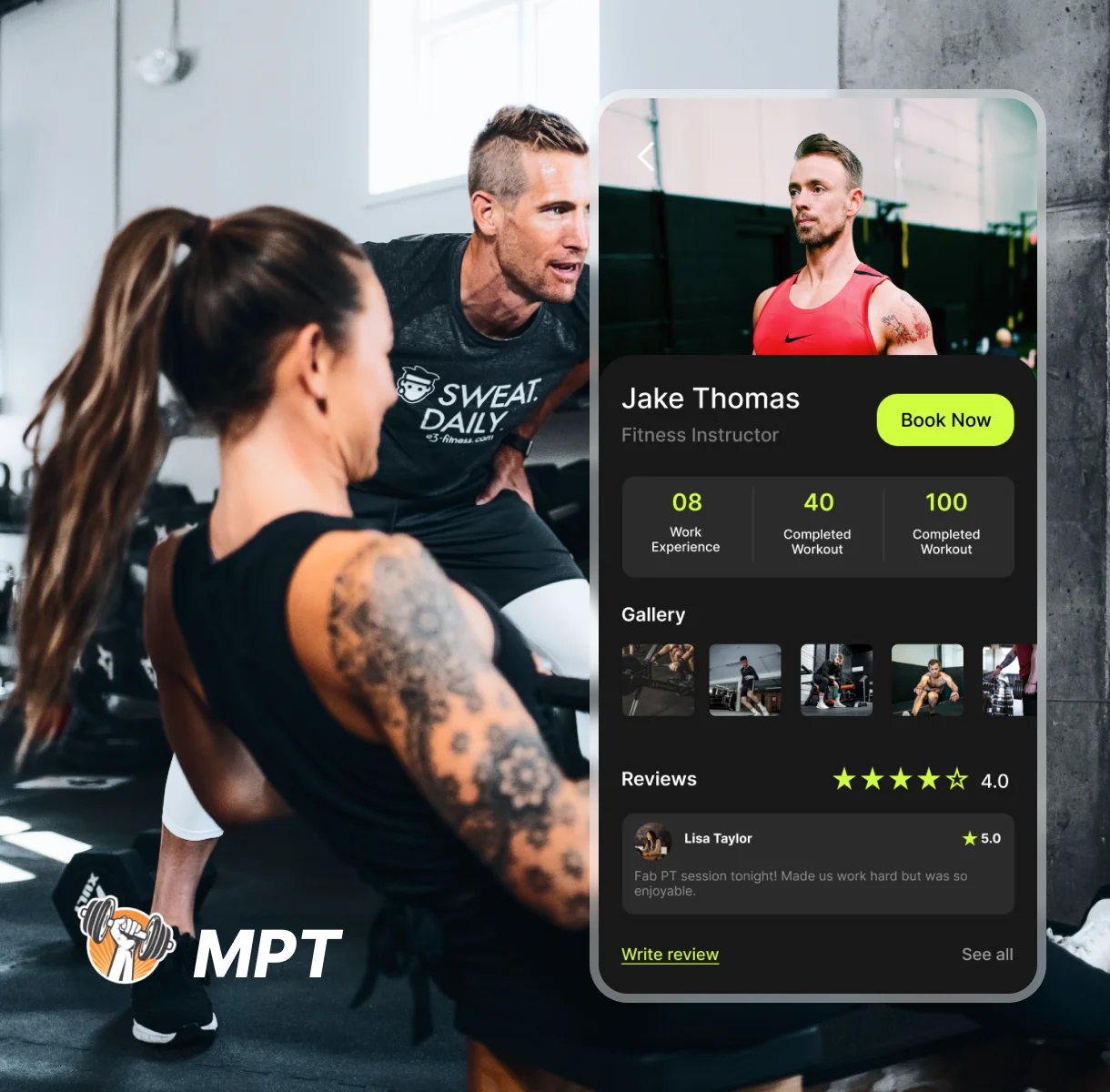

Fitness

Experience personalized workout and nutrition plans with our AI-driven data insights. Achieve fitness goals through virtual coaching and interactive apps, while our AI solutions streamline gym operations and client retention strategies.

“As a retail business, finding the right technology partner was crucial. We partnered with Markovate, who expertly guided our product development from concept to launch. Their recommended innovative solutions have significantly enhanced our operations and reduced our costs.” – John D, CEO, Aisle 24

FAQs

About LLM development

What is an LLM (Large Language Model)?

What are the types of Large Language Models?

What is the timeline to develop an LLM?

The timeline depends largely on the size of the model and the complexity of the task. For example, developing a small model for a simple task, such as sentiment analysis on a small dataset, may take only a few days to a week. However, developing a large-scale LLM for a complex task, such as natural language translation, may require a team of researchers several months or even years to develop, train and optimize.

It’s worth noting that developing an LLM is an iterative process involving multiple rounds of training, validation and fine-tuning, so the timeline may vary depending on the results of each iteration and the level of accuracy the task requires.