Artificial Intelligence has transitioned from a luxury to a necessity in today’s competitive landscape. A deep understanding of the AI tech stack is beneficial and crucial for crafting cutting-edge products capable of revolutionizing business operations. Let’s explore in-depth to understand everything about the AI tech stack.

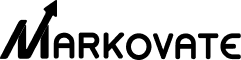

AI Tech Stack Layers

The AI stack is a structural framework comprising interdependent layers, each serving a critical function to ensure the system’s efficiency and effectiveness. Unlike a monolithic architecture, where each component is tightly coupled and entangled, the AI stack’s layered approach allows for modularity, scalability, and easy troubleshooting. This architecture comprises critical components such as data ingestion, data storage, data processing, machine learning algorithms, APIs, and user interfaces. These layers act as the foundational pillars that support the intricate web of algorithms, data pipelines, and application interfaces in a typical AI system. Let’s understand these layers in depth.

1. Application Layer

The Application Layer is the manifestation of user experience, encapsulating elements from web applications to REST APIs that manage data flow between client-side and server-side environments. Additionally, this layer handles essential operations. It captures inputs via GUIs, renders visualizations on dashboards, and provides data-driven insights through API endpoints. Technologies like React for frontend and Django for backend are often employed, each chosen for their specific advantages in tasks such as data validation, user authentication, and API request routing. The Application Layer serves as a gateway that routes user requests to underlying machine learning models while also maintaining stringent security protocols to protect data integrity.

2. Model Layer

Transitioning to the Model Layer, this is the engine room of decision-making and data processing. Specialized libraries such as TensorFlow or PyTorch take the helm here, offering a versatile toolkit for a range of machine learning activities including but not limited to natural language understanding, computer vision, and predictive analytics. Feature engineering, model training, and hyperparameter tuning occur in this domain. Different machine learning algorithms—from regression models to complex neural networks—are scrutinized for performance metrics such as precision, recall, and F1-score. This layer acts as an intermediary, pulling in data from the Application Layer, performing computation-intensive tasks, and then pushing the insights back to be displayed or acted upon.

3. Infrastructure Layer

The Infrastructure Layer is fundamental for both model training and inference. This layer is where computational resources such as CPUs, GPUs, and TPUs are allocated and managed. Scalability, latency, and fault tolerance are engineered at this level using orchestration tools like Kubernetes for container management. On the cloud side, services like AWS’s EC2 instances or Azure’s AI-specific accelerators can be incorporated to provide the heavy lifting in terms of computation. This infrastructure is not merely a passive recipient of requests but a dynamic entity programmed to allocate resources judiciously. Load balancing, data storage solutions, and network latency are all engineered to meet the specific needs of the above layers, ensuring that bottlenecks in computational power do not become a stumbling block for the application’s performance.

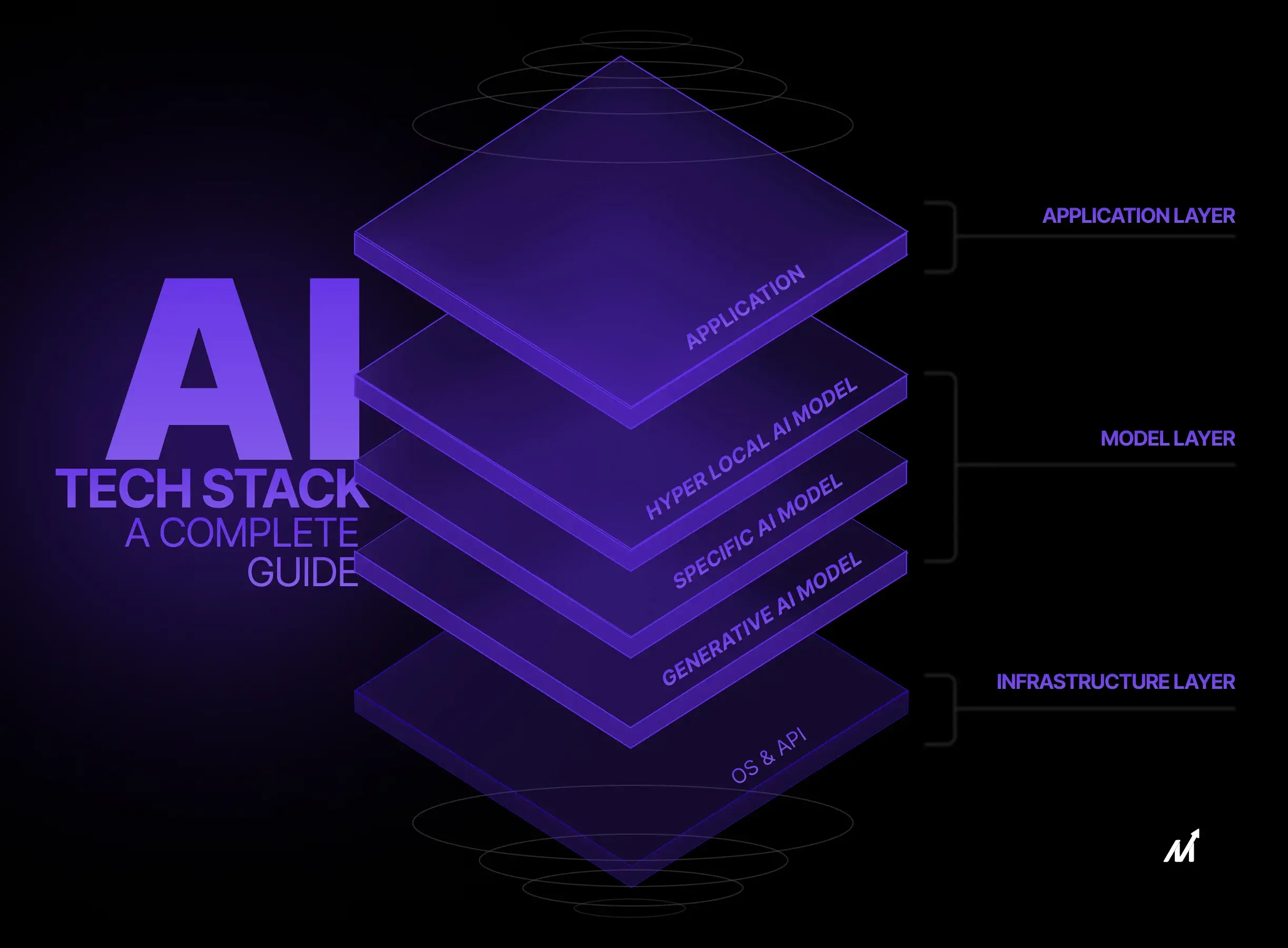

AI Tech Stack: Components & Their Relevance

The architecture encompassing artificial intelligence (AI) solutions entails multifaceted modules, each specialized in discrete tasks but cohesively integrated for comprehensive functionality. The sophistication of this stack of technologies is instrumental in driving AI’s capabilities from data ingestion to final application. Below, are the various components that make up the AI tech stack:

1. Data Storage and Organization

Before any AI processing occurs, the foundational step is the secure and efficient storage of data. Storage solutions such as SQL databases for structured data and NoSQL databases for unstructured data are essential. For large-scale data, Big Data solutions like Hadoop’s HDFS and Spark’s in-memory processing become necessary. The type of storage selected directly impacts the data retrieval speed, which is crucial for real-time analytics and machine learning pipelines.

2. Data Preprocessing and Feature Identification: The Bridge to Machine Learning

Following storage is the meticulous task of data preprocessing and feature identification. Preprocessing involves normalization, handling missing values, and outlier detection—these steps are performed using libraries such as Scikit-learn and Pandas in Python. Feature identification is pivotal for dimensionality reduction and is executed using techniques like Principal Component Analysis (PCA) or Feature Importance Ranking. These cleansed and reduced features become the input for machine learning algorithms, ensuring higher accuracy and efficiency.

3. Supervised and Unsupervised Algorithms: The Core of Data Modeling

Once preprocessed data is available, machine learning algorithms are employed. Algorithms like Support Vector Machines (SVMs) for classification, Random Forest for ensemble learning, and k-means for clustering serve specific roles in data modeling. The choice of algorithm directly impacts computational efficiency and predictive accuracy, and therefore, must be in line with the problem’s requirements.

4. Transition to Deep Learning: Enhanced Computational Modeling

As computational problems grow in complexity, traditional machine-learning algorithms may fall short. This necessitates the use of deep learning frameworks such as TensorFlow, PyTorch, or Keras. These frameworks support the construction and training of complex neural network architectures like Convolutional Neural Networks (CNNs) for image recognition or Recurrent Neural Networks (RNNs) for sequential data analysis.

5. Natural Language Understanding and Sentiment Analysis: Deciphering Human Context

When it comes to interpreting human language, Natural Language Processing (NLP) libraries like NLTK and spaCy serve as the foundational layer. For more advanced applications like sentiment analysis, transformer-based models like GPT-4 or BERT offer higher levels of understanding and context recognition. These NLP tools and models are usually integrated into the AI stack following deep learning components for applications requiring natural language interaction.

6. Visual Data Interpretation and Recognition: Making Sense of the World

In the realm of visual data, computer vision technologies such as OpenCV are indispensable. Advanced applications may leverage CNNs for facial recognition, object detection, and more. These computer vision components often work in tandem with machine learning algorithms to enable multi-modal data interpretation.

7. Robotics and Autonomous Systems: Real-world Applications

For physical applications like robotics and autonomous systems, sensor fusion techniques are integrated into the stack. In addition, algorithms for Simultaneous Localization and Mapping (SLAM) and decision-making algorithms like Monte Carlo Tree Search (MCTS) are implemented. These elements function alongside the machine learning and computer vision components, driving the AI’s capability to interact with its environment.

8. Cloud and Scalable Infrastructure: The Bedrock of AI Systems

The entire AI tech stack often operates within a cloud-based infrastructure like AWS, Google Cloud, or Azure. Therefore, these platforms provide scalable, on-demand computational resources that are vital for data storage, processing speed, and algorithmic execution. The cloud infrastructure acts as the enabling layer that allows for the seamless and integrated operation of all the above components.

AI Ecosystem Foundations: A Blueprint for Intelligent Application Development

A well-architected AI tech stack fundamentally comprises multifaceted application frameworks that offer an optimized programming paradigm, readily adaptable to emerging technological evolutions. For instance, frameworks like LangChain, Fixie, Microsoft’s Semantic Kernel, and Vertex AI by Google Cloud, equip engineers to build applications equipped for autonomous content creation, semantic comprehension for natural language search queries, and task execution through intelligent agents.

1. Computational Intelligence Modules: The Cognitive Layer

Situated at the heart of the AI tech stack are the Foundation Models (FMs), essentially serving as the cognitive layer that enables complex decision-making and logical reasoning. Whether it’s in-house creations from enterprises like Open AI, Anthropic, or Cohere or open-source alternatives, these models offer a vast spectrum of capabilities. Engineers can leverage multiple FMs to enhance application performance. Deployment options include both centralized server architectures and edge computing, offering benefits such as reduced latency and improved security.

2. Data Operations: Fueling the Cognitive Engines

Language Learning Models (LLMs) possess the capability to make inferences based on their training data. To maximize efficacy and precision, engineers must establish robust data operationalization protocols. Data loaders, vector databases, and similar tools become instrumental in ingesting both structured and unstructured datasets, facilitating efficient storage and query execution. Moreover, technologies like retrieval-augmented generation add another layer of customization to the model outputs.

2. Performance Assessment Mechanisms: Quantitative and Qualitative Metrics

Navigating the trade-offs among model efficiency, fiscal expenditure, and response latency is a significant hurdle in the realm of generative AI. Engineers employ an array of diagnostic utilities to fine-tune this balance, including prompt optimization, real-time performance analytics, and experimental tracking. For these purposes, a myriad of No Code/Low Code tools, tracking utilities, and specialized platforms such as WhyLabs’ LangKit are at the developers’ disposal.

3. Production Transitions: The Final Deployment Stage

The ultimate goal in the developmental pipeline is to transition applications from experimental stages to live production environments. Engineers have the flexibility to opt for self-hosting or may enlist third-party deployment services. Moreover, various facilitators like Fixie streamline the construction, dissemination, and implementation phases of AI application deployment.

In summary, the AI tech stack is an all-encompassing infrastructure, supporting the life cycle of intelligent application creation from conceptualization to live production. This intricate system provides the technical scaffolding that revolutionizes how applications are built, how information synthesis occurs, and how task execution is accomplished. It embodies a transformative methodology that redefines both the technological landscape and the operational modalities of contemporary work environments. If you want to learn how you can leverage the AI tech stack for your business solutions, refer to AI consulting services.

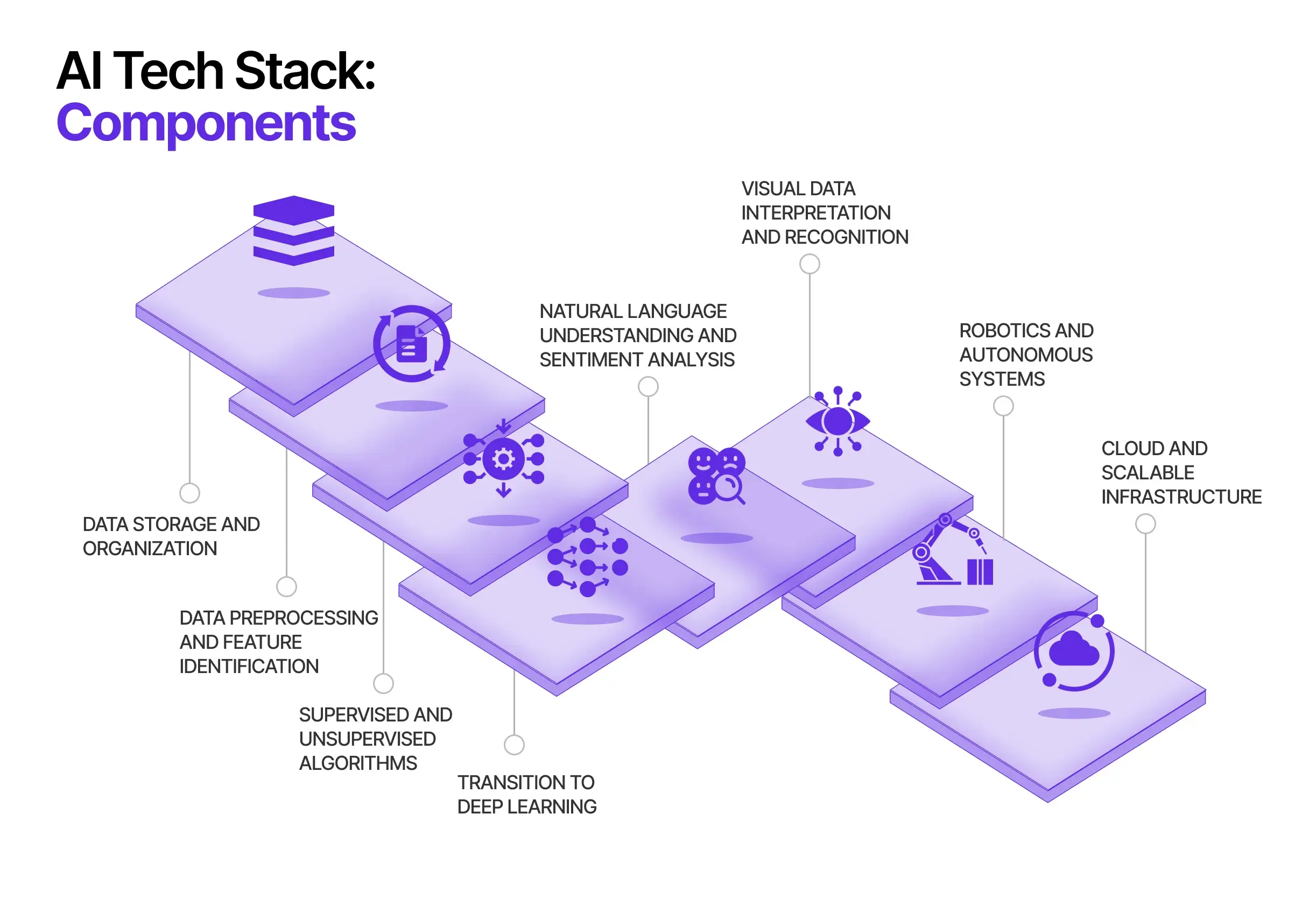

AI Tech Stack – The Necessity for AI Success

The necessity of a meticulously curated technology stack in constructing robust AI systems cannot be overstated. Therefore, from machine learning frameworks to programming languages, cloud services, and data manipulation utilities, each element plays a pivotal role. Below is a technical breakdown of these critical constituents.

1. Machine Learning Frameworks: Core of the AI Tech Stack

The architecture of AI models necessitates advanced machine learning frameworks for training and inference. TensorFlow, PyTorch, and Keras are not mere libraries; they’re ecosystems that offer an arsenal of tools and Application Programming Interfaces (APIs) to build, optimize, and validate machine learning models. They also host a spectrum of pre-configured models for tasks ranging from natural language processing to computer vision. Such frameworks must form the bedrock of the technology stack, offering avenues to adapt models for specific metrics like precision, recall, or F1 score.

2. Programming Languages: Foundation of the AI Tech Stack

The choice of programming language is consequential to the harmonious interplay between user accessibility and model efficiency. Python stands as the lingua franca of machine learning, owing to its readability and exhaustive package repositories. While Python predominates, languages such as R and Julia also find applications, particularly for statistical analysis and high-performance computing tasks, respectively.

3. Cloud Resources: Essential Infrastructure for the AI Tech Stack

The computational and storage demands of generative AI models are far from trivial. The integration of cloud services in the technology stack furnishes these models with the requisite horsepower. Services such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure render configurable resources like virtual machines, along with dedicated machine learning platforms. The cloud infrastructure’s innate scalability ensures that the AI systems can adapt to varying workloads without sacrificing performance or incurring downtime.

4. Data Manipulation Utilities: Vital Tools in the AI Tech Stack

Raw data is seldom ready for immediate model training; preprocessing steps like normalization, encoding, and imputation are often imperative. In addition, utilities like Apache Spark and Apache Hadoop offer data processing capabilities that can manage voluminous datasets with efficiency. Additionally, their added functionality for data visualization aids in exploratory data analysis, facilitating the uncovering of hidden trends or anomalies within the data.

By methodically selecting and integrating these components into a cohesive technology stack, one achieves not just a functional AI system but an optimized one. The system thus assembled will exhibit enhanced accuracy, greater scalability, and reliability, which are indispensable for the rapid evolution and deployment of AI applications. It is the blend of these well-chosen resources that makes a technology stack not just comprehensive but also instrumental in achieving the highest echelons of performance in AI systems.

(Read more about how to build an AI system)

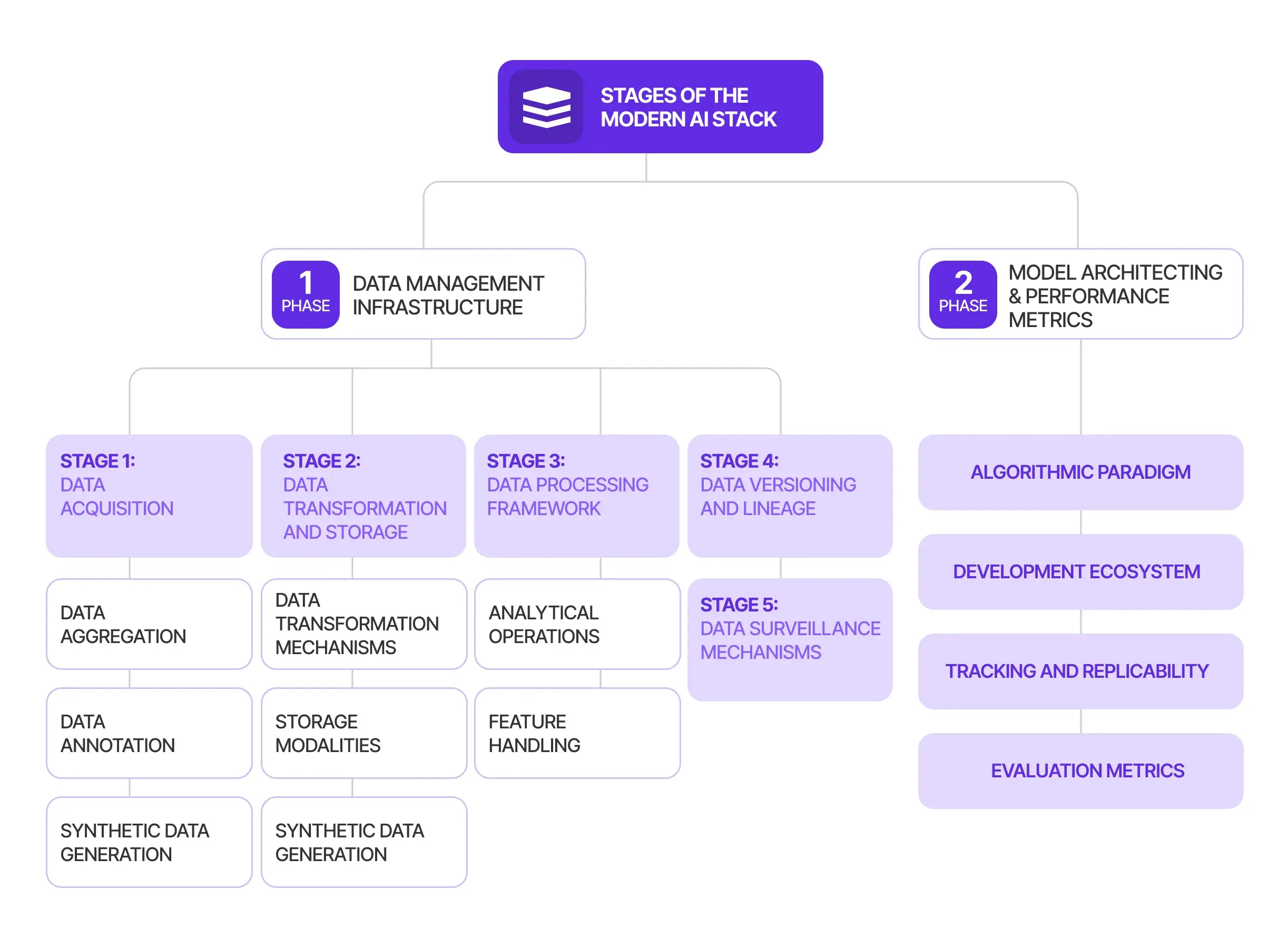

Stages of the Modern AI Tech Stack

To effectively build, deploy, and scale AI solutions, a systematic framework is indispensable. Consequently, serving as the backbone for AI applications, offering a layered approach to tackling the multifaceted challenges posed by AI development. This framework is usually divided into distinct phases, each responsible for a particular aspect of the AI life cycle, such as data management, data transformation, and machine learning, among others. Let’s understand each of these phases to understand each layer’s importance, tools, and methodologies.

Phase One: Data Management Infrastructure

Data is the lifeblood that fuels machine learning algorithms, analytics, and ultimately, decision-making. Thus, the first phase of our AI Stack discussion centers around Data Management Infrastructure, a segment vital for obtaining, refining, and making data actionable. This phase can be subdivided into several stages, each focusing on a specific aspect of data handling, including Data Acquisition, Data Transformation and Storage, and Data Processing Frameworks. Subsequently, we dissect each stage to provide a comprehensive understanding of its mechanisms, tools, and significance.

Stage 1. Data Acquisition

1.1 Data Aggregation

The data acquisition mechanism is a complex interplay involving an amalgamation of internal toolsets and external applications. These disparate sources coalesce to curate an actionable data set for further operations.

1.2 Data Annotation

The data harvested is subjected to a labeling process, which is essential for machine-based learning in a supervised environment. Consequently, automation has incrementally taken over this laborious task through software solutions like V7 Labs and ImgLab. Nevertheless, manual scrutiny remains indispensable due to algorithmic limitations in identifying outlier cases.

1.3 Synthetic Data Generation

Notwithstanding the vast volumes of available data, gaps exist, particularly in niche use cases. Therefore, tools like Tensorflow and OpenCV have been employed to create synthetic image data. Libraries like SymPy and Pydbgen are deployed in symbolic expressions and categorical data. Additional utilities like Hazy and Datomize provide integration with other platforms.

Stage 2. Data Transformation and Storage

2.1 Data Transformation Mechanisms

ETL and ELT serve as two contrasting paradigms for data transformation. ETL prioritizes data refinement, temporarily staging the data for processing before final storage. In contrast, ELT focuses on practicality, depositing data first and transforming it. Reverse ETL has emerged to sync data stores with end-user interfaces like CRMs and ERPs, democratizing data across applications.

2.2 Storage Modalities

Various methodologies exist for data storage, each fulfilling a specific need. Data lakes excel at storing unstructured data, whereas data warehouses are tailored for storing highly processed, structured data. An array of cloud-based solutions like Google Cloud Platform and Azure Cloud furnish robust storage capabilities.

Stage 3. Data Processing Framework

3.1 Analytical Operations

The data, post-acquisition, must be processed into a digestible form. Libraries like NumPy and pandas are widely utilized for this data analysis phase. In addition, for high-velocity data handling, Apache Spark serves as a potent tool.

3.2 Feature Handling

Feature stores like Iguazio, Tecton, and Feast are the cornerstones of efficient feature management, significantly enhancing the reliability of feature pipelines across machine-learning applications.

Stage 4. Data Versioning and Lineage

Versioning remains a cornerstone in data management, facilitating reproducibility in an ever-changing data environment. Additionally, tools like DVC are technology-agnostic, integrating seamlessly with various storage mediums. On the lineage front, platforms like Pachyderm offer data versioning and provide an intricate mapping of data lineage, presenting a coherent data narrative.

Stage 5. Data Surveillance Mechanisms

Automated monitoring solutions like Censius are instrumental in maintaining data quality identifying discrepancies such as missing values, type conflicts, or statistical variations. Supplementary monitoring tools like Fiddler and Grafana serve similar purposes but add a layer of Complexity by tracking data traffic volumes.

Phase 2: Model Architecting and Performance Metrics

Modeling in the landscape of artificial intelligence and machine learning isn’t a linear process but rather a cyclical one involving iterative advancements and evaluations. It begins after the data has been amassed, appropriately stored, scrutinized, and transmuted into functional attributes. Model development is not just about algorithmic choices but should is the lens of computational constraints, operational conditions, and data security governance.

2.1 Algorithmic Paradigm

Machine learning comes with extensive libraries like TensorFlow, PyTorch, scikit-learn, and MXNET, each offering unique selling points—computational speed, flexibility, ease of learning curve, or robust community backing. Once a library aligns with project requirements, one can start the routine procedures of model selection, parameter tuning, and iterative experimentation.

2.2 Development Ecosystem

The Integrated Development Environment (IDE) scaffolds AI and software development. Through integration of essential components—code editors, compilation mechanisms, debugging tools, and more—streamlining the development workflow. PyCharm stands out for its ease in dependency management and code linkage, ensuring the project remains stable, even when transitioning between different developers or teams.

Visual Studio Code (VS Code) emerges as another reliable IDE, highly versatile across operating systems and offering integrations with external utilities like PyLint and Node.js. Other IDEs like Jupyter and Spyder are predominantly utilized during the prototyping phase. Traditionally an academic favorite, MATLAB is gradually gaining ground in commercial applications for end-to-end code support.

2.3 Tracking and Replicability

The machine learning technology stack is inherently experimental, demanding numerous tests across data subsets, feature engineering, and resource allocation to fine-tune the optimum model. Furthermore, the capacity to replicate experiments is crucial for retracing the developmental trajectory and production deployment.

Tools like MLFlow, Neptune, and Weights & Biases facilitate rigorous experiment tracking. At the same time, Layer offers an overarching platform for managing all project metadata. This ensures a collaborative ecosystem that can dynamically adapt to scale, a significant focus for companies that aim for robust, collaborative machine learning ventures.

2.4 Evaluation Metrics

Performance assessment in machine learning involves intricate comparisons across multiple experimental outcomes and data divisions. Automated tools such as Comet, Evidently AI, and Censius play a vital role here. These utilities automate monitoring, allowing data scientists to focus on core objectives rather than manual performance tracking.

These platforms provide standard and customizable metric evaluations tailored to generic and nuanced use cases. Detailing performance issues about other challenges, such as data quality degradation or model drifts, becomes indispensable for root cause analysis.

Criteria for Selecting an AI Tech Stack

1. Technical Specifications and Functionality

Determining the technical specifications and functionalities of a project plays an instrumental role in the choice of an AI technology stack. Moreover, the magnitude and ambition of the project necessitated a corresponding complexity in the stack. This complexity ranges from the programming languages to the frameworks utilized. Concerning generative AI, the following are the core parameters that need evaluation:

- Data Modality: Whether the AI system will generate images, text, or audio dictates the algorithmic approach—GANs for visual elements and RNNs or LSTMs for textual or auditory data.

- Computational Complexity: Factors like the number of inputs, neural layers, and data volume necessitate a robust hardware architecture, potentially demanding GPUs and frameworks like TensorFlow or PyTorch.

- Scalability Demands: In cases that require immense computational elasticity—such as generating data variations or accommodating numerous users—cloud-based infrastructures like AWS, Google Cloud Platform, or Azure become imperative.

- Precision Metrics: For critical applications like drug development or autonomous navigation, highly accurate generative techniques like VAEs or RNNs are given priority.

- Execution Speed: For real-time applications, like video streaming or conversational agents, optimization strategies to accelerate model inference are crucial.

2. Competency and Assets

The skills and resources available to the development team are pivotal in the choice of an AI stack. Additionally, the decision-making should be strategic, eliminating any steep learning curves that might impede progress. Considerations in this domain include:

- Team Expertise: Align the stack with the team’s proficiencies in specific languages or frameworks to accelerate the development timeline.

- Hardware Resources: If GPUs or other specialized hardware are accessible, consider more advanced computational frameworks.

- Support Ecosystem: Ensure that the chosen technology stack has comprehensive documentation, tutorials, and a community to assist in overcoming bottlenecks.

- Fiscal Constraints: Budget limitations may necessitate a cost-effective yet competent technology stack.

- Maintenance Complexity: Post-deployment updates and support should be straightforward, and facilitated by reliable community or vendor support.

3. System Scalability

A system’s scalability directly impacts its longevity and adaptability. Besides, an optimal stack should account for vertical scalability—enhanced performance across multiple devices—and horizontal scalability—ease of feature augmentation. Important variables to consider include:

- Data Volume: Large datasets may necessitate distributed computing frameworks like Apache Spark for efficient manipulation.

- User Traffic: High user concurrency calls for architectures that can manage substantial request loads, which may require cloud-based or microservices designs.

- Real-Time Processing: Instantaneous data processing requirements should guide the selection towards performance-optimized or lightweight models.

- Batch Operations: Systems requiring high-throughput batch operations may benefit from distributed computing frameworks for efficient data processing.

4. Information Security and Compliance

A secure data environment is paramount, particularly when handling sensitive or financial information. Essential security criteria to consider include:

- Data Integrity: Choose stacks offering robust encryption, role-based access, and data masking to safeguard against unauthorized data manipulation.

- Model Security: The AI models constitute valuable intellectual assets, demanding features that prevent unauthorized access or tampering.

- Infrastructure Defense: Firewalls, intrusion detection systems, and other cybersecurity tools are necessary to fortify the operational infrastructure.

- Regulatory Adherence: Depending on the sector—such as healthcare or finance—the stack must be compliant with industry-specific regulations like HIPAA or PCI-DSS.

- Authentication Mechanisms: Strong user authentication and authorization protocols ensure that only authorized personnel can interface with the system and its data.

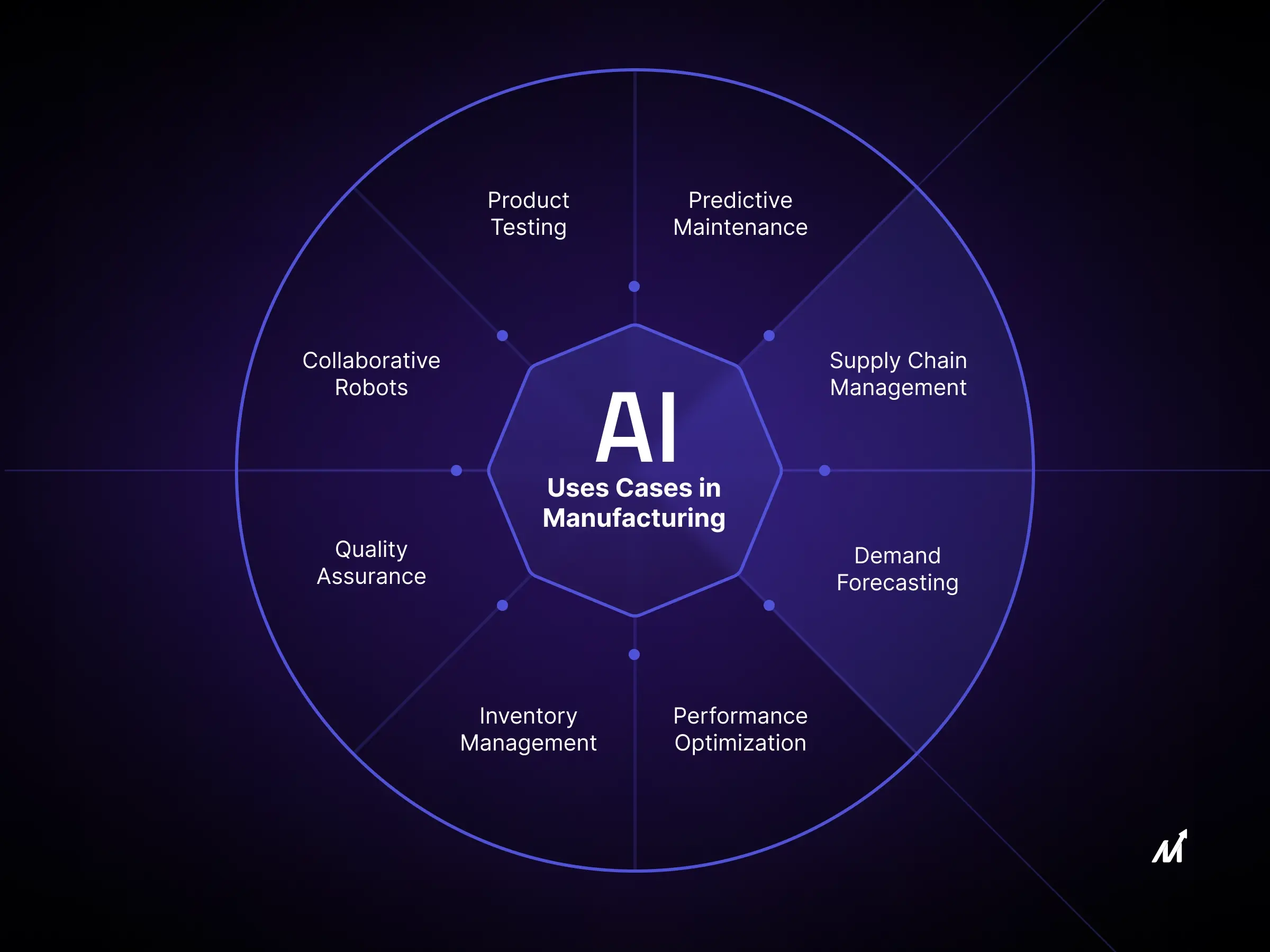

AI Tech Stack Engineering Partner – Markovate

We at Markovate are your partners in developing AI models and solutions tailored to your specific needs and have a solid basis in AI development. Our team at Markovate knows the full AI ecosystem, from machine learning techniques to data analytics.

We use cutting-edge AI Technology Stack, which includes data-focused engines, like Apache Spark and Hadoop, when you collaborate with us as a partner. TensorFlow, PyTorch, and other cutting-edge machine learning frameworks work with these to provide insights that inform and drive decision-making.

Integrating cloud services? We cover it, too. To ensure your AI applications grow and function efficiently, we collaborate with the top cloud computing service providers.

So why choose Markovate? We provide more than simply AI services and technology. Our team offers a road plan for the future whether it’s generative AI or deep learning or Machine Learning, one in which your company will not only adapt to change but also benefit from it. We aren’t simply meeting the industry standards but setting them. Join forces with us, and let’s create future AI.

AI Tech Stack: Frequently Asked Queries

1. What is the AI Tech Stack?

The AI Tech Stack is a comprehensive ensemble of distinct but interoperable technologies, platforms, and methodologies that facilitate the architecture, orchestration, and operationalization of artificial intelligence applications.

2. What are the Constituent Elements of the AI Technology Ecosystem?

The technological backbone of AI is organized around several layers. These include a data orchestration tier and a machine learning algorithmic layer. Additionally, there are layers for advanced neural networks, computer vision, robotics, foundational AI infrastructure, and natural language interpretation mechanisms.

3. How to do Security and Privacy Safeguarding in AI Tech Stack?

In security and data integrity, AI stacks deploy specialized behavioral analytics algorithms that model user interactions to detect unnatural patterns. Additionally, machine learning models identify malicious software and auto-execute defensive protocols to mitigate cyber threats.

4. What are Standard Technologies Incorporated in AI Tech Stack?

The AI tech stack integrates Apache Spark and Hadoop for robust data curation, inspection, and transformation, optimizing analytical utility. Moreover, this approach amplifies the stack’s data visualization and exploratory data analysis efficiency.

5. What Cloud Ecosystems Commonly Leveraged in AI Tech Stacks?

AI tech stacks use cloud solutions with specialized AI services to optimize data scalability, resource allocation, and application efficiency. Additionally, these platforms commonly include Amazon Web Services, Microsoft Azure, & Google Cloud Platform (GCP).

6. What are Prevalent Machine Learning Frameworks in AI Ecosystems?

The AI stack commonly uses machine learning frameworks to facilitate predictive analytics based on probabilistic models and statistical theory. Some of the most ubiquitously employed frameworks for this purpose encompass TensorFlow, PyTorch, Scikit-Learn, Spark ML, Torch, and Keras. These frameworks function as the neural synapses of the AI, converting data into actionable insights through complex computations.